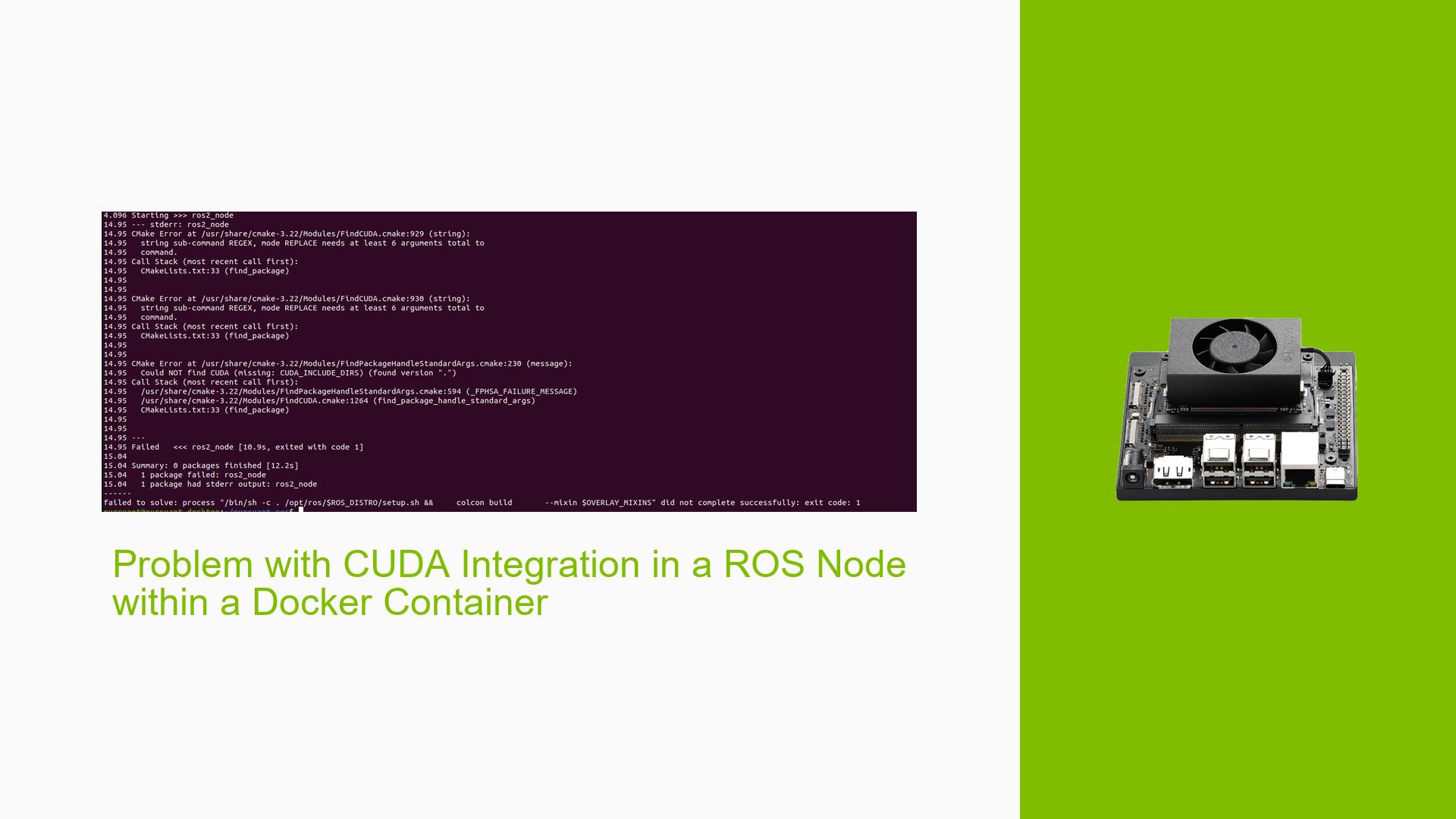

Problem with CUDA Integration in a ROS Node within a Docker Container

Issue Overview

Users are experiencing difficulties integrating CUDA within a ROS node that runs inside a Docker container. The primary symptom is an error during CMake configuration at the line `find_package(CUDA REQUIRED)`, which indicates that CMake cannot locate a CUDA toolkit in the build environment.

The issue arises while the Docker image is being built, when the user attempts to compile a ROS node that uses both CUDA and PyTorch. The Dockerfile provided includes steps for installing CUDA, but CMake still fails to locate the necessary CUDA components.

Specific Symptoms

- Error message indicating CUDA is not found during CMake configuration.

- Successful integration of other libraries (like OpenCV) in similar setups, suggesting that the issue is isolated to CUDA.

Context

- The problem occurs during the setup of a ROS node that requires CUDA and PyTorch.

- The user has provided a detailed Dockerfile and CMakeLists.txt, indicating an attempt to set environment variables for CUDA.

Hardware and Software Specifications

- The base image used in the Dockerfile is `ros:iron`.

- CUDA version 12.5 is being installed.

- The user is on an ARM64 architecture (implied by the use of `cuda-tegra-repo`).
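Since the ARM64/Jetson platform is only implied, it may help to confirm it directly on the host. The following is a minimal sketch, not part of the original report: the function names are hypothetical, and the check for `/etc/nv_tegra_release` assumes that file is present on NVIDIA L4T (Jetson) systems.

```bash
# Map a machine name (as printed by `uname -m`) to a Docker-style architecture.
normalize_arch() {
    case "$1" in
        aarch64|arm64) echo "arm64" ;;
        x86_64|amd64)  echo "amd64" ;;
        *)             echo "unknown" ;;
    esac
}

# Succeed if the filesystem looks like NVIDIA L4T (Jetson).
# Assumption: L4T ships /etc/nv_tegra_release. The optional argument is a
# hypothetical root prefix, used only so the check can be tried on a copy.
is_tegra() {
    [ -f "${1:-}/etc/nv_tegra_release" ]
}

# Example (run on the host, not inside the container):
#   normalize_arch "$(uname -m)"
#   is_tegra && echo "Jetson/L4T host detected"
```

If the host reports `arm64` and the Tegra marker is present, the Jetson-specific CUDA packages are the ones to look at.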

Frequency and Impact

- This issue appears to be consistent for the user, as they cannot proceed with building their ROS node.

- The inability to integrate CUDA significantly hampers development efforts related to machine learning tasks within ROS.

Possible Causes

- Hardware Incompatibilities: If the hardware does not support the specific version of CUDA being installed, it may lead to detection issues.

- Software Bugs or Conflicts: There may be bugs in either the Docker image or in how CUDA interacts with other libraries within ROS.

- Configuration Errors: Incorrect paths or environment variables in the Dockerfile or CMakeLists.txt could prevent proper detection of CUDA.

- Driver Issues: Missing or improperly configured NVIDIA drivers could lead to problems with accessing GPU resources.

- Environmental Factors: Insufficient permissions or incorrect runtime configurations (e.g., not using `--runtime nvidia`) may inhibit access to GPU capabilities.

- User Errors: Misconfigurations in Docker setup or CMakeLists.txt could lead to failures in finding CUDA.

Troubleshooting Steps, Solutions & Fixes

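- Verify NVIDIA Driver Installation:

  - Ensure that NVIDIA drivers are correctly installed on your host system by running `nvidia-smi`.

  - This command should display GPU information; if it doesn’t, reinstall the drivers. On Jetson (Tegra) systems, which the `cuda-tegra-repo` in this setup suggests, the driver ships with JetPack/L4T and `nvidia-smi` may be unavailable, so a missing command there does not by itself indicate a broken driver.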

- Install NVIDIA Container Toolkit:

  - Make sure you have installed the NVIDIA Container Toolkit, which allows Docker containers to use GPU resources: `sudo apt-get install nvidia-container-toolkit`

  - Launch your container with: `docker run --gpus all --runtime=nvidia <image_name>`

- Check Environment Variables:

  - Confirm that all relevant environment variables are set correctly in your Dockerfile, one `ENV` instruction per line:

    - `ENV CUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda-12.5`

    - `ENV PATH=/usr/local/cuda-12.5/bin:$PATH`

    - `ENV LD_LIBRARY_PATH=/usr/local/cuda-12.5/lib64:$LD_LIBRARY_PATH`

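- Modify CMakeLists.txt:

  - Ensure that the paths specified in your CMakeLists.txt are correct and match those set in your Dockerfile. The suggested lines are `set(CMAKE_CUDA_COMPILER $ENV{CUDA_TOOLKIT_ROOT_DIR}/bin/nvcc)` followed by `find_package(CUDA REQUIRED)`.

  - Note that a Dockerfile `ENV` sets an environment variable, which CMake reads as `$ENV{CUDA_TOOLKIT_ROOT_DIR}`. The form `${CUDA_TOOLKIT_ROOT_DIR}` refers to a CMake variable and is empty at this point unless it is passed explicitly, for example with `-DCUDA_TOOLKIT_ROOT_DIR=/usr/local/cuda-12.5`.

  - The `FindCUDA` module behind `find_package(CUDA)` has been deprecated since CMake 3.10. On CMake 3.17 or newer, `find_package(CUDAToolkit)` is the maintained alternative and may be worth trying if the project allows it.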

- Test with Different Base Images:

  - Consider switching to a base image that is known to work with ROS and CUDA on Jetson, such as the images built by the `dusty-nv/jetson-containers` project. The original suggestion was `FROM dusty-nv/jetson-containers:ros`; this looks like shorthand rather than a published image name, so check the project's image list for a ROS tag that matches your JetPack/L4T release.

- Rebuild Your Docker Image:

  - After making changes, rebuild your Docker image without the layer cache so that all updates are applied: `docker build --no-cache -t <image_name> .`

- Check for Additional Dependencies:

  - Ensure all dependencies required by both ROS and CUDA are installed properly within your Docker container.

- Consult Documentation and Community Resources:

  - Refer to NVIDIA’s official documentation for installing CUDA and integrating it with ROS for any additional steps or updates.
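The environment-variable and CMake steps above both depend on the CUDA toolkit actually being present at the configured path inside the image. A minimal sketch of such a check follows. The function name `check_cuda_root` is hypothetical, and the file list (`bin/nvcc`, `include/cuda_runtime.h`) is an assumption about a typical toolkit layout rather than something taken from the user's Dockerfile.

```bash
# Check that a CUDA toolkit root contains files a CMake CUDA lookup typically needs.
# Prints one line per missing item and returns non-zero if anything is absent.
# Assumption: the default root matches the path used in the ENV lines above.
check_cuda_root() {
    cuda_root="${1:-/usr/local/cuda-12.5}"
    cuda_missing=0
    for cuda_item in bin/nvcc include/cuda_runtime.h; do
        if [ ! -e "$cuda_root/$cuda_item" ]; then
            echo "missing: $cuda_root/$cuda_item"
            cuda_missing=1
        fi
    done
    return "$cuda_missing"
}

# Example (inside the container, or in a Dockerfile RUN step before the build):
#   check_cuda_root "$CUDA_TOOLKIT_ROOT_DIR" || exit 1
```

Running this before the ROS build makes the image build stop with a clear message when the toolkit is absent, instead of failing later inside CMake.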

Recommended Approach

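Multiple users have suggested ensuring that the NVIDIA Container Toolkit is properly installed and that containers are launched with GPU access enabled (`--runtime=nvidia`). This approach has reportedly worked for others facing similar issues. Note that `--runtime` and `--gpus` are `docker run` options and do not affect `docker build`, where this failure occurs, so the runtime used while building the image may also need checking.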
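For the build-time case, one setting that may be worth inspecting is Docker's default runtime, which is commonly set to `nvidia` in `/etc/docker/daemon.json` on Jetson setups. The sketch below only reads the file. The function name is hypothetical, the path is the usual default rather than something confirmed by the source, and whether this setting resolves the reported error is not established here.

```bash
# Succeed if the given Docker daemon config sets "default-runtime" to "nvidia".
# Assumption: the config lives at /etc/docker/daemon.json unless a path is given.
has_nvidia_default_runtime() {
    daemon_config="${1:-/etc/docker/daemon.json}"
    [ -f "$daemon_config" ] &&
        grep -Eq '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$daemon_config"
}

# Example (run on the host):
#   has_nvidia_default_runtime || echo "default runtime is not nvidia"
```

The pattern match is deliberately simple and does not parse JSON, so it is a quick indicator rather than a validation of the whole file.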

Unresolved Aspects

Further investigation may be needed into specific compatibility issues between the versions of ROS, CUDA, and PyTorch being used, as well as potential conflicts arising from other installed libraries within the Docker container.
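As a starting point for that investigation, the toolkit version reported by `nvcc` can be compared with the CUDA version PyTorch was built against. This is a rough sketch with hypothetical function names. Treating a matching major version as "probably compatible" is a coarse heuristic assumed here for illustration, not a rule from the source.

```bash
# Extract the "release X.Y" version from `nvcc --version` output read on stdin.
nvcc_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Succeed when two CUDA version strings share the same major version.
same_cuda_major() {
    [ -n "$1" ] && [ "${1%%.*}" = "${2%%.*}" ]
}

# Example (inside the container, once nvcc and PyTorch are both installed):
#   toolkit="$(nvcc --version | nvcc_release)"
#   torch_cuda="$(python3 -c 'import torch; print(torch.version.cuda)')"
#   same_cuda_major "$toolkit" "$torch_cuda" ||
#       echo "CUDA major versions differ: $toolkit vs $torch_cuda"
```

A CPU-only PyTorch build reports `None` for `torch.version.cuda`, which this comparison will flag as a mismatch.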