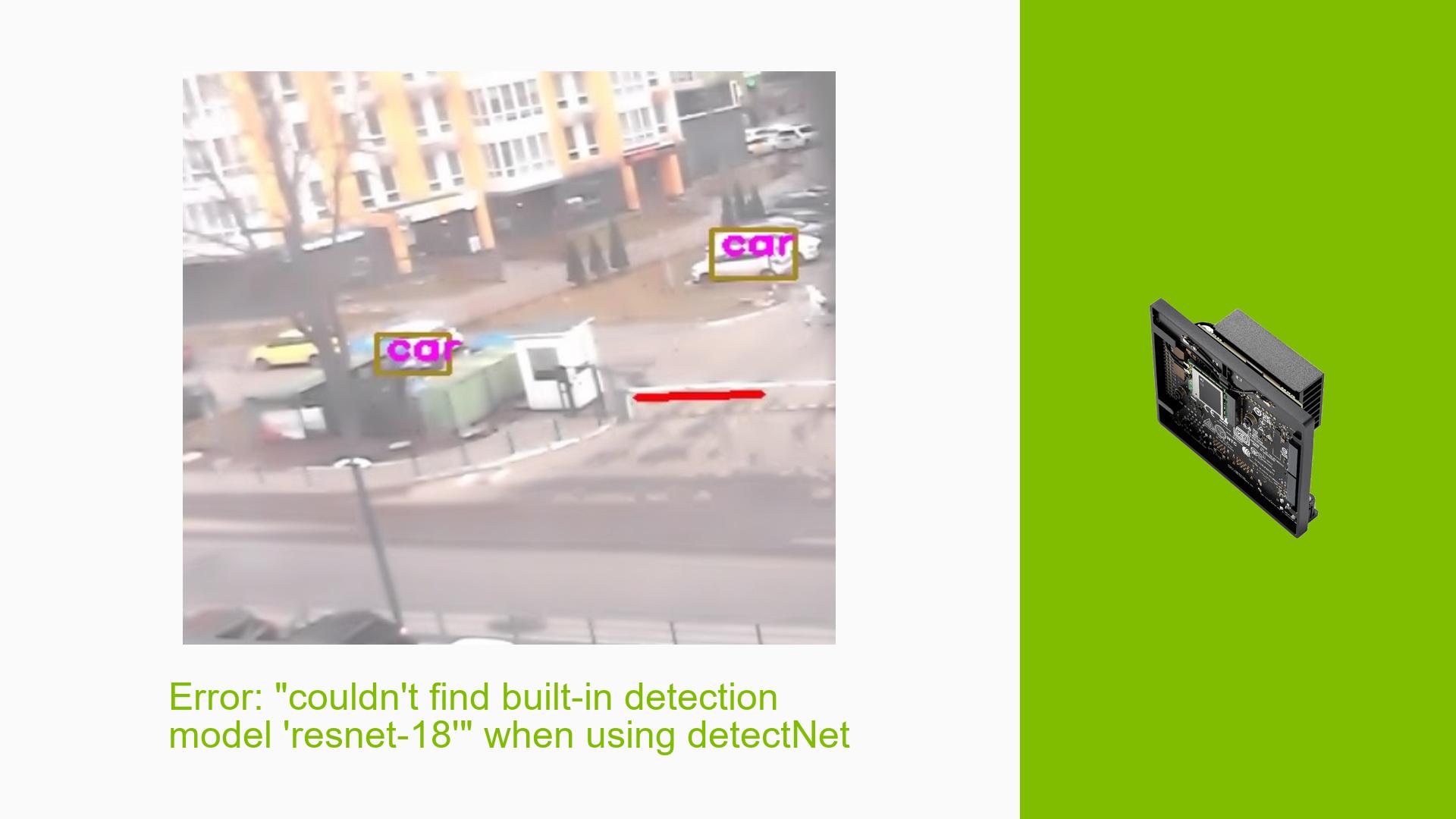

Error: “couldn’t find built-in detection model ‘resnet-18’” when using detectNet

Issue Overview

Users see the error "[TRT] couldn’t find built-in detection model ‘resnet-18’" when they pass resnet-18 to the detectNet function in the Jetson Inference library. The error appears when the default "ssd-mobilenet-v2" network is replaced with a name that detectNet does not recognize as a detection model. The most likely cause is a mix-up between model types: resnet-18 is a classification model, while detectNet expects an object detection model.

Possible Causes

- Incorrect model selection: The resnet-18 model is designed for image classification tasks, not object detection.

- Incomplete model installation: The required models may not have been fully downloaded or installed during the initial setup process.

- Misuse of API: Attempting to use a classification model (resnet-18) with a detection function (detectNet) is causing the error.

- Configuration issues: The system may not be properly configured to locate or use certain models.
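The split between classification and detection models can be sketched as a simple lookup. This is a minimal illustration, not part of the Jetson Inference library: the helper name `api_for_model` is hypothetical, and the two model lists are partial and assumed, since the real set of built-in networks depends on the installed version.

```python
# Partial, assumed lists of built-in model names. The real set depends on
# the installed Jetson Inference version, so treat these as examples only.
CLASSIFICATION_MODELS = {
    "alexnet", "googlenet", "resnet-18", "resnet-50",
    "resnet-101", "resnet-152", "vgg-16", "vgg-19", "inception-v4",
}
DETECTION_MODELS = {"ssd-mobilenet-v1", "ssd-mobilenet-v2", "ssd-inception-v2"}


def api_for_model(name):
    """Return the API that can load `name`, or None if it is not in either list."""
    key = name.strip().lower()
    if key in CLASSIFICATION_MODELS:
        return "imageNet"
    if key in DETECTION_MODELS:
        return "detectNet"
    return None


if __name__ == "__main__":
    for model in ("resnet-18", "ssd-mobilenet-v2"):
        print(model, "->", api_for_model(model))
```

A name that maps to `imageNet` here, such as resnet-18, is the kind of name that triggers the error when it is handed to detectNet.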

Troubleshooting Steps, Solutions & Fixes

- Verify model compatibility:

  - Understand that resnet-18 is a classification model, not a detection model.

  - Use resnet-18 with imagenet/imagenet.py for classification tasks.

  - For object detection, use appropriate models like "ssd-mobilenet-v2".

- Check model installation:

  - Review the model download process during initial setup.

  - Ensure all required models are selected and properly downloaded.

  - To verify, check the following directories:

    /usr/local/bin/networks

    /usr/local/bin/data
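The directory check can also be scripted. The sketch below assumes the two paths named above and only lists what it finds; the function name `list_model_dirs` is hypothetical, and the script does not know which files a given model needs.

```python
from pathlib import Path

# Directories named in this article; adjust if your install prefix differs.
MODEL_DIRS = ("/usr/local/bin/networks", "/usr/local/bin/data")


def list_model_dirs(dirs=MODEL_DIRS):
    """Map each directory to its sorted entry names, or None if it is missing."""
    report = {}
    for directory in dirs:
        path = Path(directory)
        if path.is_dir():
            report[directory] = sorted(entry.name for entry in path.iterdir())
        else:
            report[directory] = None
    return report


if __name__ == "__main__":
    for directory, entries in list_model_dirs().items():
        if entries is None:
            print(f"{directory}: missing")
        else:
            print(f"{directory}: {len(entries)} entries")
            for name in entries:
                print("   ", name)
```

A missing directory or an empty listing suggests that the model download step did not complete.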

- Use the correct API for the task:

  - For object detection, use:

    jetson_inference.detectNet("ssd-mobilenet-v2", threshold=0.5)

  - For image classification with resnet-18, use:

    jetson_inference.imageNet("resnet-18")
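One way to keep the two APIs from being mixed up is to choose the constructor from the task rather than from the model name. The following is a minimal sketch under that assumption: `load_network` and its task labels are hypothetical, while the two constructor calls are the ones shown in this section.

```python
def load_network(task, model):
    """Create a Jetson Inference network whose type matches the task."""
    if task not in ("classification", "detection"):
        raise ValueError(f"unknown task: {task!r}")

    # Imported lazily so the task check above also works on machines
    # where the jetson_inference module is not installed.
    import jetson_inference

    if task == "detection":
        # Same call as in this section: detection model plus threshold.
        return jetson_inference.detectNet(model, threshold=0.5)
    # Classification models such as resnet-18 belong here.
    return jetson_inference.imageNet(model)


# Example usage on a Jetson device (not executed here):
#   detector = load_network("detection", "ssd-mobilenet-v2")
#   classifier = load_network("classification", "resnet-18")
```

The helper does not validate the model name itself, so passing resnet-18 with the "detection" task would still reach detectNet and produce the same error.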

- Reinstall or update Jetson Inference:

  - If issues persist, consider reinstalling or updating the Jetson Inference library.

  - Follow the official documentation for the installation process.

- Verify model availability:

  - Use the following command to list available models:

    jetson-inference --network-type=all

  - Ensure the desired model is listed and properly installed.

- Check system logs:

  - Examine system logs for any additional error messages or clues:

    sudo journalctl -xe

- Update CUDA and TensorRT:

  - Ensure you have the latest compatible versions of CUDA and TensorRT installed.

  - Check the Jetson Inference documentation for version compatibility.
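Before comparing against the compatibility notes, it helps to know which versions are actually installed. The sketch below is one possible way to collect them, assuming the TensorRT Python bindings are importable as `tensorrt` and that `nvcc` is on the PATH (on some systems it is not, even when CUDA is installed). The function name `installed_versions` is hypothetical.

```python
import importlib
import shutil
import subprocess


def installed_versions():
    """Return TensorRT and nvcc version info, with None for anything not found."""
    versions = {"tensorrt": None, "nvcc": None}

    # TensorRT: read the version string from the Python bindings, if present.
    try:
        versions["tensorrt"] = importlib.import_module("tensorrt").__version__
    except (ImportError, AttributeError):
        pass

    # CUDA: ask nvcc for its version banner, if the compiler is on the PATH.
    nvcc = shutil.which("nvcc")
    if nvcc:
        result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
        versions["nvcc"] = result.stdout.strip() or None

    return versions


if __name__ == "__main__":
    for name, value in installed_versions().items():
        print(f"{name}: {value if value else 'not found'}")
```

A `None` entry only means the script could not locate that component this way, not that the component is absent.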

- Seek community support:

  - If the issue persists, post a detailed description of the problem, including your code and system configuration, on the NVIDIA Developer Forums or in the GitHub issues for the Jetson Inference project.

By following these steps, users should be able to resolve the error and correctly use the appropriate models for their specific AI tasks on the Jetson platform.