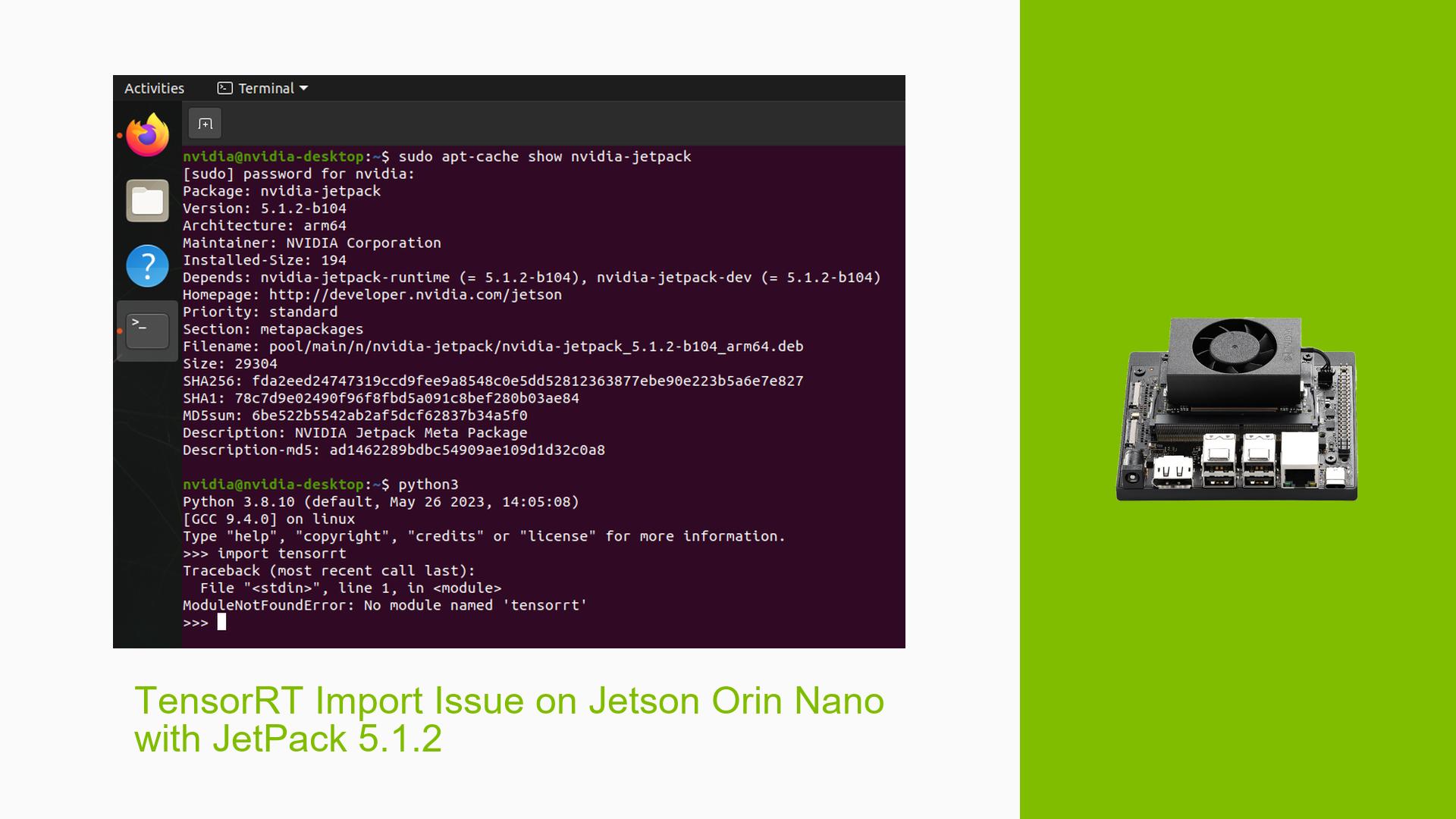

TensorRT Import Issue on Jetson Orin Nano with JetPack 5.1.2

Issue Overview

Users report that TensorRT cannot be imported on the NVIDIA Jetson Orin Nano Developer Kit running JetPack 5.1.2. The specific symptoms include:

- Inability to import TensorRT in Python

- Error message indicating that TensorRT is not found

- Discrepancy between JetPack documentation stating TensorRT 8.5.2 inclusion and actual import failure

- The issue occurs after a fresh installation of the Jetson Orin Nano image on a microSD card

- JetPack version reported as 5.1.2-b104

This problem impacts the user’s ability to utilize TensorRT for deep learning inference on the Jetson platform, potentially hindering development and deployment of AI applications.

Possible Causes

- Incomplete JetPack Installation: The TensorRT packages may not have been installed during the JetPack setup process.

- Environment Configuration Issues: The Python interpreter in use may not see the system packages, for example inside a virtual environment or when a non-system interpreter is on the path.

- Version Mismatch: The TensorRT Python bindings may have been built for a different Python version than the one running the import.

- Corrupted Installation: The installation files for TensorRT might be corrupted or incomplete.

- System Updates Required: The system may require updates or additional packages to recognize and use TensorRT.

- Hardware-Specific Problem: There might be a hardware-specific issue with certain Jetson Orin Nano units affecting TensorRT functionality, although a failed Python import more often points to a software cause.

Troubleshooting Steps, Solutions & Fixes

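- Verify JetPack Installation:

  - Check the release the board is running:

        cat /etc/nv_tegra_release

  - This file reports the L4T (Jetson Linux) release rather than the JetPack version itself. JetPack 5.1.2 corresponds to L4T R35, revision 4.1; ensure the output shows that release or later.

  - To see the JetPack package version (for example 5.1.2-b104), run `apt-cache show nvidia-jetpack`.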
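The release file can also be read from a script. The following is a minimal sketch, assuming the first line of `/etc/nv_tegra_release` has the usual `# R35 (release), REVISION: 4.1, ...` shape. The function name `parse_l4t_release` is illustrative and not part of any NVIDIA tool.

```python
import re
from pathlib import Path
from typing import Optional

RELEASE_FILE = Path("/etc/nv_tegra_release")


def parse_l4t_release(text: str) -> Optional[str]:
    """Return the L4T version (e.g. '35.4.1'), or None if the text does not match.

    Assumes the 'R<major> (release), REVISION: <minor.patch>' layout.
    """
    match = re.search(r"R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)", text)
    if match is None:
        return None
    major, revision = match.groups()
    return f"{major}.{revision}"


if __name__ == "__main__":
    if RELEASE_FILE.exists():
        print("L4T release:", parse_l4t_release(RELEASE_FILE.read_text()))
    else:
        print(f"{RELEASE_FILE} not found; this does not look like a Jetson system.")
```

A result of `35.4.1` matches JetPack 5.1.2; anything older suggests the image on the microSD card is not the expected one.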

- Check TensorRT Installation:

  - Attempt to import TensorRT in a `python3` session:

        import tensorrt
        print(tensorrt.__version__)

  - If successful, it should display the TensorRT version (e.g., 8.5.2.2).
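When the import fails, it helps to record which interpreter was used and whether it can locate the module at all. The sketch below uses only the standard library; the helper name `diagnose_import` is hypothetical, and the `in_virtualenv` flag detects `venv`/`virtualenv` environments only (not conda).

```python
import importlib.util
import sys


def diagnose_import(module_name: str) -> dict:
    """Collect facts that help explain why a module can or cannot be imported."""
    # find_spec locates the module without executing it; None means "not on sys.path".
    spec = importlib.util.find_spec(module_name)
    return {
        "module": module_name,
        "found": spec is not None,
        "location": spec.origin if spec is not None else None,
        "interpreter": sys.executable,
        "python_version": ".".join(map(str, sys.version_info[:3])),
        # True inside a venv/virtualenv, where system dist-packages may be hidden.
        "in_virtualenv": sys.prefix != sys.base_prefix,
    }


if __name__ == "__main__":
    for key, value in diagnose_import("tensorrt").items():
        print(f"{key}: {value}")
```

If `found` is false while `in_virtualenv` is true, running the same script with the system `python3` is a quick way to tell an environment problem from a missing package.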

- Update the System:

  - Run the following commands to update the system:

        sudo apt update
        sudo apt upgrade

  - Reboot the system after updates.

- Reinstall TensorRT:

  - If TensorRT is not found, install the Python bindings package:

        sudo apt install python3-libnvinfer

  - If the package is already installed but the import still fails, add `--reinstall` to the command to replace its files.
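Before reinstalling, it can be useful to list which TensorRT-related packages are actually installed. This is a rough sketch that calls `dpkg-query` and keeps only packages in the installed (`ii`) state. The package name patterns are assumptions based on the package named above, and the helper names are illustrative.

```python
import shutil
import subprocess

# Assumed name patterns for TensorRT-related Debian packages.
PATTERNS = ["libnvinfer*", "python3-libnvinfer*", "tensorrt*"]
# One line per package: status abbreviation, name, version, separated by tabs.
FORMAT = "${db:Status-Abbrev}\t${Package}\t${Version}\n"


def parse_dpkg_output(output: str) -> dict:
    """Map package name -> version for lines whose status is 'ii' (installed)."""
    packages = {}
    for line in output.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue
        status, name, version = (part.strip() for part in parts)
        if status == "ii" and version:
            packages[name] = version
    return packages


def installed_tensorrt_packages() -> dict:
    """Query dpkg for the patterns above; return {} when dpkg-query is unavailable."""
    if shutil.which("dpkg-query") is None:
        return {}
    result = subprocess.run(
        ["dpkg-query", "-W", "-f", FORMAT, *PATTERNS],
        capture_output=True, text=True, check=False,  # non-zero exit when nothing matches
    )
    return parse_dpkg_output(result.stdout)


if __name__ == "__main__":
    found = installed_tensorrt_packages()
    if not found:
        print("No installed TensorRT packages detected.")
    for name, version in sorted(found.items()):
        print(f"{name} {version}")
```

An empty result points to an incomplete JetPack installation, whereas a populated list with a failing import points to the Python environment.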

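- Check Python Environment:

  - Verify the Python version:

        python3 --version

  - Ensure it's compatible with the installed TensorRT version. JetPack 5.x is based on Ubuntu 20.04, whose system interpreter is Python 3.8, and the bindings from `python3-libnvinfer` are installed for that interpreter. A virtual environment or a separately installed Python of a different version will not find them.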
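To compare the running interpreter with the one the bindings were installed for, a script can search the system `dist-packages` directories. This is a sketch under the assumption that the bindings live under `/usr/lib/python3*/dist-packages/tensorrt`; the function names are hypothetical.

```python
import sys
from pathlib import Path


def find_binding_dirs(package: str = "tensorrt", lib_root: Path = Path("/usr/lib")) -> dict:
    """Map a directory label such as 'python3.8' to the path holding the package."""
    found = {}
    for candidate in sorted(lib_root.glob(f"python3*/dist-packages/{package}")):
        if candidate.is_dir():
            # candidate = <lib_root>/python3.X/dist-packages/<package>
            found[candidate.parent.parent.name] = candidate
    return found


def running_label() -> str:
    """Label of the interpreter executing this script, e.g. 'python3.8'."""
    return f"python{sys.version_info.major}.{sys.version_info.minor}"


if __name__ == "__main__":
    bindings = find_binding_dirs()
    current = running_label()
    if not bindings:
        print("No tensorrt directory found under /usr/lib/python3*/dist-packages.")
    for label, path in bindings.items():
        note = "matches this interpreter" if label == current else "different interpreter"
        print(f"{label}: {path} ({note})")
```

If the only directory found belongs to a different Python version than the one printed by `python3 --version`, the mismatch is the likely cause of the import error.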

- Inspect System Logs:

  - Check for any error messages related to TensorRT:

        dmesg | grep -i tensorrt

  - The kernel log often contains nothing about a failed Python import, so also keep the full Python traceback from the import attempt.

- Utilize NVIDIA Samples:

  - Clone and build the jetson-inference sample repository to test TensorRT functionality (the `--recursive` flag fetches the submodules the build needs):

        git clone --recursive https://github.com/dusty-nv/jetson-inference.git
        cd jetson-inference
        mkdir build
        cd build
        cmake ..
        make -j$(nproc)
        sudo make install
        sudo ldconfig

- Consider Alternative TensorRT Usage:

  - For PyTorch models, consider using the torch2trt converter. Note that torch2trt itself relies on the TensorRT Python bindings, so it helps only once `import tensorrt` works:

        git clone https://github.com/NVIDIA-AI-IOT/torch2trt.git
        cd torch2trt
        sudo python3 setup.py install

- Contact NVIDIA Support:

  - If the issue persists, reach out to NVIDIA's official support channels or community forums for Jetson-specific assistance.

- Perform a Clean Installation:

  - As a last resort, consider performing a clean installation of the Jetson Orin Nano image, ensuring all steps in the official guide are followed meticulously.

Remember to document any error messages or unexpected behavior encountered during these troubleshooting steps, as they may provide valuable information for further diagnosis.