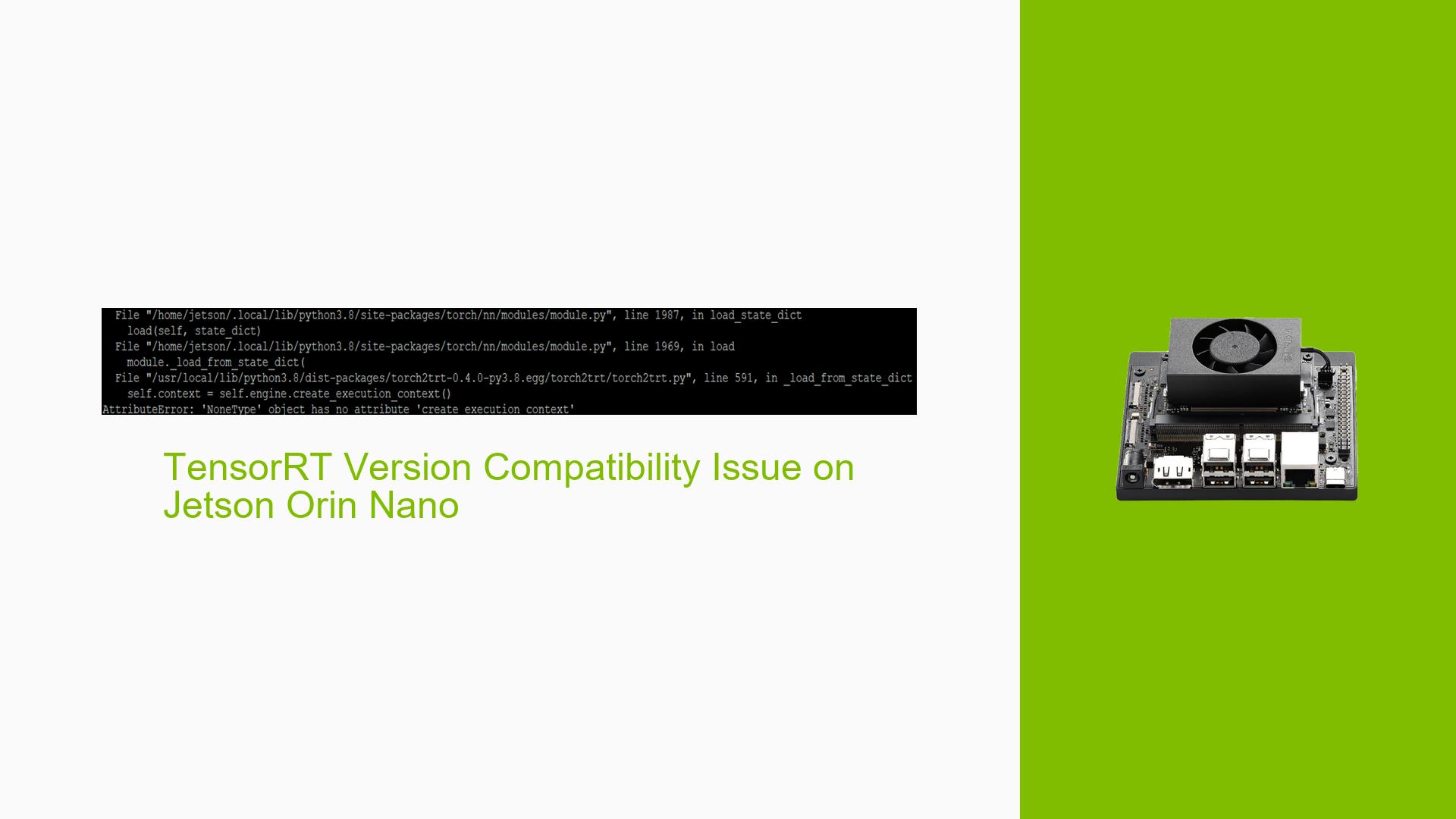

TensorRT Version Compatibility Issue on Jetson Orin Nano

Issue Overview

Users are experiencing compatibility issues when attempting to run TensorRT models on the Jetson Orin Nano that were originally converted on a Jetson Nano. When the application loads (deserializes) the engine file, TensorRT reports that the engine is not compatible with the current platform. As a result, pre-converted engine files cannot be reused across different Jetson platforms.

Possible Causes

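- Hardware architecture differences: The Jetson Nano and Jetson Orin Nano use different System-on-Chip (SoC) designs with different GPU architectures (Maxwell, compute capability 5.3, on the Jetson Nano; Ampere, compute capability 8.7, on the Orin Nano). A TensorRT engine is compiled for the GPU architecture it was built on, so an engine from one device cannot run on the other.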

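- TensorRT version mismatch: A serialized engine is tied to the TensorRT version that built it. The newest JetPack release supported on the Jetson Nano is the 4.6.x series, while the Orin Nano requires JetPack 5.1.1 or newer, so the TensorRT versions on the two devices necessarily differ.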

- Platform-specific optimizations: When building an engine, TensorRT selects and tunes kernels for the specific GPU it is running on, so the resulting engine files are not portable across different Jetson platforms.
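To make these causes concrete, the Python sketch below models an engine's build "fingerprint" as just two fields: the TensorRT version and the GPU compute capability. This is a deliberate simplification assumed for illustration (TensorRT's real checks are internal), and the version strings are illustrative, not taken from any specific JetPack release.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngineFingerprint:
    """Simplified, hypothetical model of what a serialized engine is tied to."""
    trt_version: str          # TensorRT version used to build (or run) the engine
    compute_capability: str   # GPU compute capability, e.g. "5.3" or "8.7"


def can_deserialize(built_on: EngineFingerprint, target: EngineFingerprint) -> bool:
    # In this simplified model, an engine only loads when both the
    # TensorRT version and the GPU architecture match exactly.
    return built_on == target


# Illustrative values: Jetson Nano (Maxwell, 5.3) vs. Orin Nano (Ampere, 8.7).
jetson_nano = EngineFingerprint(trt_version="8.2.1", compute_capability="5.3")
orin_nano = EngineFingerprint(trt_version="8.5.2", compute_capability="8.7")

# Even with an identical TensorRT version, the GPU mismatch alone blocks reuse.
orin_same_trt = EngineFingerprint(trt_version="8.2.1", compute_capability="8.7")

print(can_deserialize(jetson_nano, orin_nano))      # False
print(can_deserialize(jetson_nano, orin_same_trt))  # False
```

The second comparison is the important one: fixing the version mismatch does not remove the hardware mismatch.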

Troubleshooting Steps, Solutions & Fixes

- Re-generate the TensorRT engine file:
  - The primary solution is to re-generate the TensorRT engine file directly on the Jetson Orin Nano.
  - This ensures that the engine file is optimized for the specific hardware of the Orin Nano.
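One common way to rebuild is NVIDIA's `trtexec` tool, which JetPack installs under `/usr/src/tensorrt/bin/`. The sketch below assumes the original model is available as ONNX (`model.onnx` and `model_orin_nano.engine` are placeholder names) and only assembles and runs the command; `--onnx`, `--saveEngine`, and `--fp16` are standard `trtexec` options. The helper names are hypothetical.

```python
import subprocess
from typing import List

# Default trtexec location on JetPack installs; adjust if yours differs.
TRTEXEC = "/usr/src/tensorrt/bin/trtexec"


def trtexec_command(onnx_path: str, engine_path: str, fp16: bool = True) -> List[str]:
    """Build the argument list for regenerating an engine from an ONNX model."""
    cmd = [TRTEXEC, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels in the built engine
    return cmd


def rebuild_engine(onnx_path: str, engine_path: str, fp16: bool = True) -> None:
    # Run this ON the Orin Nano so the engine matches its GPU and TensorRT version.
    subprocess.run(trtexec_command(onnx_path, engine_path, fp16), check=True)


if __name__ == "__main__":
    print(" ".join(trtexec_command("model.onnx", "model_orin_nano.engine")))
```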

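- Check TensorRT versions:
  - Check which TensorRT version each device runs (the Jetson Nano, where the model was originally converted, and the Orin Nano). By default, an engine only loads under the same TensorRT version that built it.
  - Matching versions alone will not make a Jetson Nano engine load on the Orin Nano, because the GPU architectures also differ; rebuilding on the target is still required.
  - On Jetson, TensorRT ships as part of JetPack, so update it if necessary by installing the newest JetPack release supported by your Orin Nano.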
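A quick way to see which TensorRT version Python picks up on a device is `tensorrt.__version__`. The sketch below wraps that lookup and adds a hypothetical comparison helper; it assumes purely numeric dotted version strings, and the example versions in the comments are illustrative.

```python
from typing import Optional, Tuple


def installed_trt_version() -> Optional[str]:
    """Return the TensorRT version seen by Python, or None if it is not installed."""
    try:
        import tensorrt as trt  # provided by JetPack on Jetson devices
    except ImportError:
        return None
    return trt.__version__


def parse_version(version: str) -> Tuple[int, ...]:
    # "8.5.2.2" -> (8, 5, 2, 2); assumes every component is numeric.
    return tuple(int(part) for part in version.split("."))


def same_trt_version(built_with: str, running: str) -> bool:
    # A standard engine needs the same TensorRT version it was built with.
    # Matching versions is still not enough across GPU architectures
    # (Jetson Nano vs. Orin Nano), so a rebuild is required either way.
    return parse_version(built_with) == parse_version(running)


if __name__ == "__main__":
    print("TensorRT on this device:", installed_trt_version())
```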

- Use platform-agnostic model formats:
  - Instead of transferring pre-built TensorRT engine files, consider using more portable model formats like ONNX.
  - Convert the ONNX model to a TensorRT engine on the target Orin Nano platform.
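The ONNX-to-engine conversion can also be done from Python with the TensorRT bindings that JetPack installs. The sketch below assumes the TensorRT 8.5-era API shipped with JetPack 5.x (`trt.Builder`, `trt.OnnxParser`, `build_serialized_network`); some details differ in other releases (for example, explicit batch is the default in TensorRT 10). The function name and the 256 MiB workspace limit are illustrative choices, not requirements.

```python
def build_engine_from_onnx(onnx_path: str, engine_path: str,
                           workspace_bytes: int = 1 << 28, fp16: bool = True) -> None:
    """Parse an ONNX model and write a serialized TensorRT engine for THIS device."""
    import tensorrt as trt  # only available where TensorRT is installed (e.g. JetPack)

    logger = trt.Logger(trt.Logger.WARNING)
    builder = trt.Builder(logger)
    flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
    network = builder.create_network(flags)
    parser = trt.OnnxParser(network, logger)

    with open(onnx_path, "rb") as f:
        if not parser.parse(f.read()):
            errors = [str(parser.get_error(i)) for i in range(parser.num_errors)]
            raise RuntimeError("ONNX parse failed:\n" + "\n".join(errors))

    config = builder.create_builder_config()
    config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, workspace_bytes)
    if fp16 and builder.platform_has_fast_fp16:
        config.set_flag(trt.BuilderFlag.FP16)  # enable FP16 kernels where supported

    # Kernel selection happens here, on the GPU of the machine running this code.
    serialized = builder.build_serialized_network(network, config)
    if serialized is None:
        raise RuntimeError("TensorRT engine build failed; see logger output")
    with open(engine_path, "wb") as f:
        f.write(serialized)
```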

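- Review JetPack versions:
  - JetPack bundles specific versions of TensorRT, CUDA, cuDNN, and other AI libraries, so the JetPack release determines which TensorRT version a device runs. Note that the two devices cannot share a release: the Jetson Nano is supported only up to the JetPack 4.6.x series, while the Orin Nano requires JetPack 5.1.1 or newer.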
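On Jetson devices, the L4T (Linux for Tegra) release is recorded in `/etc/nv_tegra_release`, and its major number indicates the JetPack series (R32 for JetPack 4.x, R35 for JetPack 5.x, R36 for JetPack 6.x). The parser below is a sketch that assumes the usual `# R35 (release), REVISION: 3.1, ...` line format; the helper names are hypothetical.

```python
import re
from typing import Optional, Tuple

# L4T major release -> JetPack series.
L4T_TO_JETPACK = {32: "4.x", 35: "5.x", 36: "6.x"}

_RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)")


def parse_l4t_release(text: str) -> Optional[Tuple[int, str]]:
    """Extract (major, revision) from /etc/nv_tegra_release contents."""
    m = _RELEASE_RE.search(text)
    if m is None:
        return None
    return int(m.group(1)), m.group(2)


def jetpack_series(text: str) -> Optional[str]:
    parsed = parse_l4t_release(text)
    if parsed is None:
        return None
    return L4T_TO_JETPACK.get(parsed[0], "unknown")


if __name__ == "__main__":
    try:
        with open("/etc/nv_tegra_release") as f:
            print("JetPack series:", jetpack_series(f.read()))
    except FileNotFoundError:
        print("No /etc/nv_tegra_release found (not a Jetson device?)")
```

Running this on both devices makes the JetPack gap between them visible at a glance.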

- Document hardware-specific builds:
  - When working with multiple Jetson platforms, clearly label and document which TensorRT engine files are built for specific hardware to avoid confusion.
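One lightweight way to document builds is a small JSON "sidecar" file stored next to each engine. The convention below (`model.engine` paired with `model.engine.json`) and the field names are hypothetical; the values you record would come from the device where the engine was built.

```python
import json
import os
from typing import Any, Dict


def manifest_path(engine_path: str) -> str:
    # Hypothetical convention: sidecar file next to the engine, e.g. model.engine.json
    return engine_path + ".json"


def write_manifest(engine_path: str, device: str, trt_version: str,
                   compute_capability: str) -> Dict[str, Any]:
    """Record which device and TensorRT version produced an engine file."""
    info = {
        "engine": os.path.basename(engine_path),
        "device": device,
        "trt_version": trt_version,
        "compute_capability": compute_capability,
    }
    with open(manifest_path(engine_path), "w") as f:
        json.dump(info, f, indent=2)
    return info


def read_manifest(engine_path: str) -> Dict[str, Any]:
    """Load the sidecar written by write_manifest."""
    with open(manifest_path(engine_path)) as f:
        return json.load(f)
```

For example, `write_manifest("model_orin_nano.engine", "jetson-orin-nano", "8.5.2", "8.7")` would leave a human-readable record beside the engine.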

- Implement a model conversion pipeline:
  - Develop a workflow that includes model conversion as part of your deployment process on each target platform.
  - This ensures that you always have the correct, optimized engine file for each specific Jetson device.
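A deployment step can make the rebuild automatic. The sketch below is one possible approach, not a standard tool: `ensure_engine` stores a build identifier (a hypothetical string such as `"orin-nano/trt-8.5.2"`) next to the engine and calls a caller-supplied `build_fn` whenever the engine is missing or was built under a different identifier. Any converter, such as a `trtexec` call or a TensorRT Python build, can be passed in as `build_fn`.

```python
import os
from typing import Callable


def ensure_engine(onnx_path: str, engine_path: str, build_id: str,
                  build_fn: Callable[[str, str], None]) -> bool:
    """Rebuild the engine on this device if it is missing or stale.

    build_id identifies the device and TensorRT version (hypothetical format).
    build_fn(onnx_path, engine_path) performs the actual conversion.
    Returns True if a rebuild happened.
    """
    stamp_path = engine_path + ".buildid"
    current = None
    if os.path.exists(engine_path) and os.path.exists(stamp_path):
        with open(stamp_path) as f:
            current = f.read().strip()
    if current == build_id:
        return False  # already built for this device/TensorRT combination
    build_fn(onnx_path, engine_path)
    with open(stamp_path, "w") as f:
        f.write(build_id)
    return True
```

Keeping the converter injectable means the same deployment logic runs unchanged on every Jetson model; only the build identifier differs per device.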

- Error analysis:
  - If you encounter the error "Engine deserialization failed: Unable to deserialize engine. Engine file was not built against this version of TensorRT, or the engine is not compatible with this platform," it’s a clear indication that you need to rebuild the engine on the target platform.
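In the TensorRT Python bindings, `Runtime.deserialize_cuda_engine` typically returns `None` when deserialization fails, with the detailed reason printed through the logger. The sketch below, which assumes the TensorRT Python bindings installed by JetPack, turns that `None` into an explicit rebuild hint; `load_engine` is a hypothetical helper name.

```python
def load_engine(engine_path: str):
    """Deserialize an engine; raise with a rebuild hint if it is incompatible."""
    import tensorrt as trt  # available on Jetson via JetPack

    logger = trt.Logger(trt.Logger.WARNING)
    runtime = trt.Runtime(logger)
    with open(engine_path, "rb") as f:
        engine = runtime.deserialize_cuda_engine(f.read())
    if engine is None:
        # TensorRT logs the underlying reason (version or platform mismatch).
        raise RuntimeError(
            f"Could not deserialize {engine_path}; rebuild it on this device "
            "(for example, from the ONNX model)."
        )
    # Return the runtime as well so it stays alive as long as the engine is used.
    return runtime, engine
```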

By following these steps, you should be able to resolve the TensorRT compatibility issue and successfully run your models on the Jetson Orin Nano. Remember that TensorRT engine files are hardware-specific and should always be generated on the target platform for optimal performance and compatibility.