TensorRT Small Model High RAM Consumption During Inference on Nvidia Jetson Orin Nano

Issue Overview

Users are experiencing unexpectedly high RAM consumption when loading a small TensorRT model for inference on the Nvidia Jetson Orin Nano development board. The converted model, which is only about 4MB in size at rest, consumes approximately 1.1GB of system RAM (CPU+GPU) when loaded. This issue is particularly puzzling as the same model runs on a Jetson TX2 device using only about 70MB of RAM.

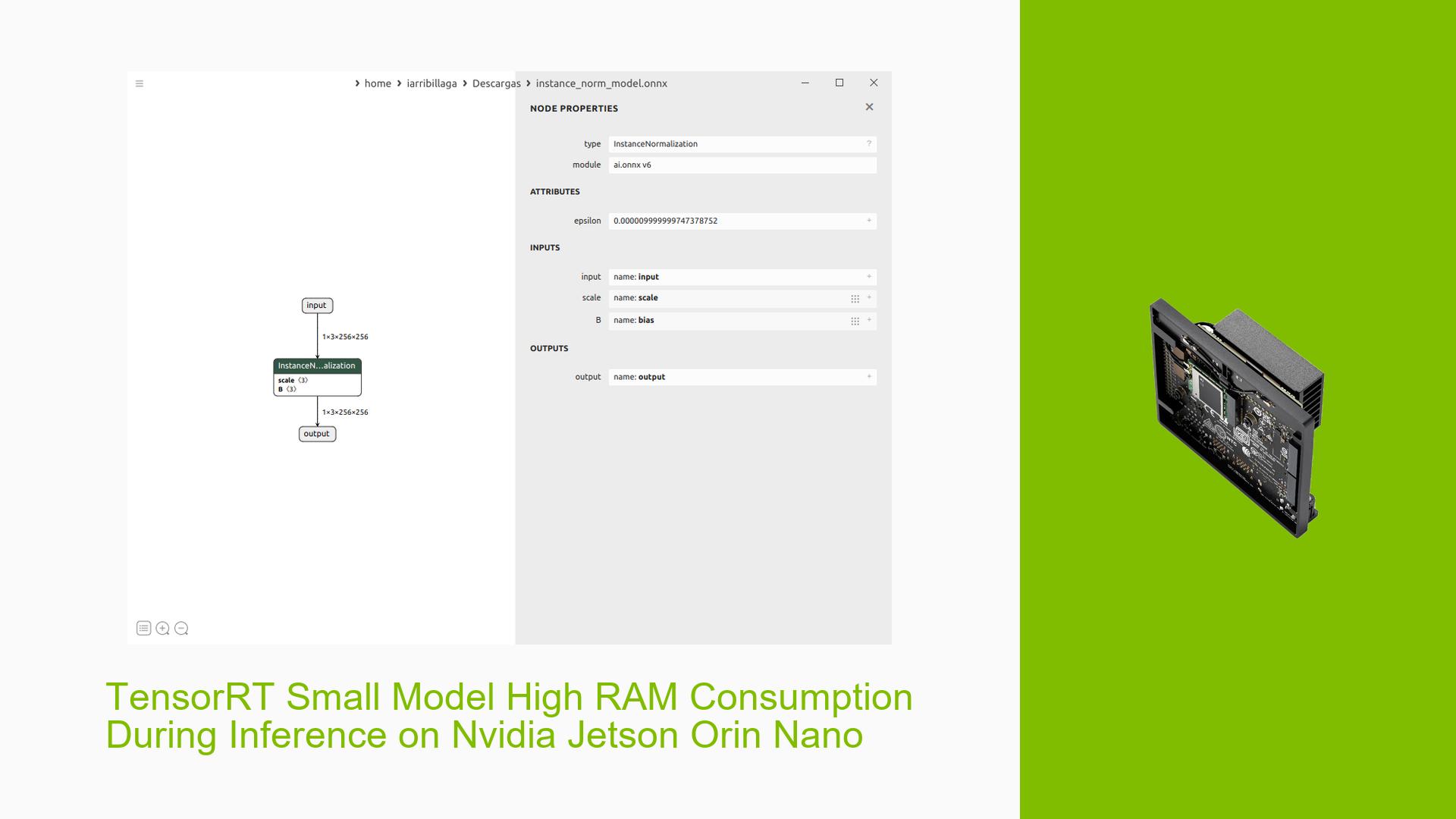

The problem occurs specifically with models containing InstanceNormalization layers, which appear to be not correctly supported in TensorRT version 8.5.2 used on the Jetson Orin Nano. Even a simple test model with just one InstanceNormalization layer consumes 940MB of RAM when loaded for inference, despite being only 2.4KB on disk.

Possible Causes

-

TensorRT Version Incompatibility: The issue may be related to the specific version of TensorRT (8.5.2) used on the Jetson Orin Nano, which might not properly support InstanceNormalization layers.

-

cuDNN Initialization: The high memory usage could be related to cuDNN and/or cuBLAS initialization, as indicated by the log message during model loading.

-

CUDA Binary Loading: The memory increase might be due to loading CUDA-related binaries, which can take over 600MB.

-

InstanceNormalization Plugin Implementation: The InstanceNormalization plugin implementation in TensorRT 8.5.2 may require cuDNN, potentially causing large memory allocation.

-

JetPack Version Limitations: The current JetPack version (5.1.3) may have limitations or bugs related to memory management for certain model architectures.

Troubleshooting Steps, Solutions & Fixes

-

Upgrade JetPack and CUDA:

- While a full upgrade to the latest JetPack is not possible, consider upgrading CUDA to version 11.8, which introduces lazy loading to avoid unnecessary binary loading during initialization.

- To upgrade CUDA without reflashing, use the NVIDIA Developer CUDA Toolkit 11.8 Downloads.

-

Disable cuDNN Dependencies:

- Try converting the model by disabling the CUDNN tactic source using the TensorRT Python API.

- Use the following code snippet when creating the builder config:

config = builder.create_builder_config() config.set_tactic_sources(1 << int(trt.TacticSource.CUBLAS)) -

Use Custom Allocator:

- Implement a custom allocator to track memory allocation requests and potentially optimize memory usage.

- Example skeleton for a custom allocator:

class CustomAllocator(trt.IGpuAllocator): def allocate(self, size, alignment, flags): # Implement allocation logic here pass def free(self, memory): # Implement deallocation logic here pass # Use the custom allocator when creating the runtime runtime = trt.Runtime(CustomAllocator()) -

Limit Workspace Size:

- Set a maximum workspace size to limit RAM usage during inference.

- Use the following code when creating the builder config:

config.set_memory_pool_limit(trt.MemoryPoolType.WORKSPACE, 1 << 20) # Set 1 MiB limit -

Investigate Alternative Layer Implementations:

- Consider replacing InstanceNormalization layers with alternative implementations that might be better supported in TensorRT 8.5.2.

- Explore the possibility of using other normalization techniques that are fully supported in the current TensorRT version.

-

Enable Verbose Logging:

- When converting the model to TensorRT, enable verbose logging to gather more information about the conversion process and potential issues.

- Use the following code to enable verbose logging:

trt.Logger(trt.Logger.VERBOSE) -

Explore TensorRT Optimization Techniques:

- Investigate TensorRT’s layer and tensor fusion optimizations to potentially reduce memory usage.

- Experiment with different precision modes (FP32, FP16, INT8) to find a balance between accuracy and memory consumption.

-

Monitor System Resources:

- Use system monitoring tools like

nvidia-smiandtopto track GPU and CPU memory usage during model loading and inference. - Look for any unexpected memory allocations or leaks.

- Use system monitoring tools like

-

Consult NVIDIA Developer Forums:

- If the issue persists, consider posting detailed logs and model information on the NVIDIA Developer Forums for further assistance from the TensorRT team.

Remember to thoroughly test any changes or workarounds to ensure they don’t negatively impact model performance or accuracy. If possible, consider upgrading to a newer version of TensorRT (8.6.1 or later) which has improved support for InstanceNormalization layers.