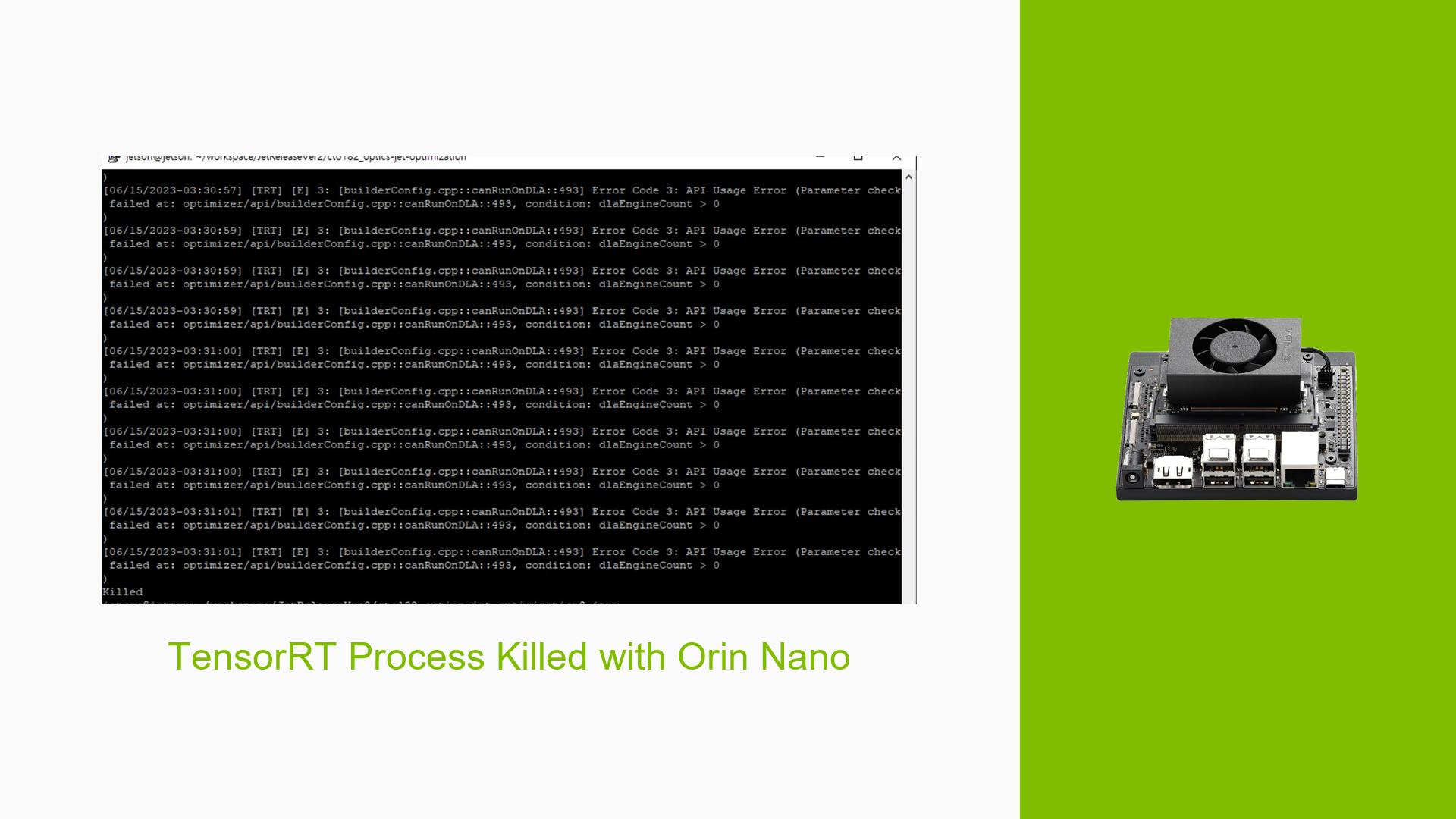

TensorRT Process Killed with Orin Nano

Issue Overview

Users are experiencing issues when converting PyTorch models to TensorRT on the Nvidia Jetson Orin Nano Developer Kit. The specific symptoms include the conversion process being abruptly terminated (killed) without clear error messages, despite successfully generating output that shows improved inferencing times. The issue arises during the model conversion phase, particularly when using the torch2trt utility with the options dla=True and max_workspace_size=1GB.

The context of the problem is primarily related to memory constraints, as users have reported that while the conversion works on the Jetson Nano (with 3.2 GB of available RAM), it fails on the Orin Nano, which has only 2.2 GB available in CLI mode. This discrepancy leads to concerns about out-of-memory errors during the conversion process.

The hardware specifications noted include:

- Device: Jetson Orin Nano (4GB)

- Test Model: DenseNet121

- JetPack Version: 5.1.1

The impact of this problem is significant as it hampers users’ ability to optimize their models for deployment, thereby affecting overall system functionality and user experience.

Possible Causes

- Hardware Incompatibilities: The Jetson Orin Nano does not support DLA hardware, which may lead to issues when attempting to use DLA for model conversion.

- Memory Constraints: The available RAM during operations is lower on the Orin Nano compared to other models, leading to potential out-of-memory errors during TensorRT conversion.

- Configuration Errors: Incorrect settings in

torch2trt, such as enabling DLA when it is unsupported, can result in process termination. - Driver Issues: Potential bugs or conflicts in the drivers associated with TensorRT or PyTorch may contribute to instability.

- Environmental Factors: Insufficient swap memory or high memory usage by other processes could exacerbate memory constraints.

- User Misconfigurations: Users may inadvertently set parameters that lead to excessive memory demands during conversion.

Troubleshooting Steps, Solutions & Fixes

-

Verify Tool Usage:

- Ensure you are using the correct tool for model conversion (e.g.,

torch2trt). - Confirm that you are not attempting to use DLA if it is unsupported on your device.

- Ensure you are using the correct tool for model conversion (e.g.,

-

Adjust Configuration Settings:

- Set

dla=Falsein your conversion command to avoid attempting DLA usage. - Decrease the batch size from 8 to 4 to reduce memory usage during inference.

- Set

-

Increase Available Memory:

- Consider adding swap memory to your system:

sudo fallocate -l 1G /swapfile sudo chmod 600 /swapfile sudo mkswap /swapfile sudo swapon /swapfile - Monitor available RAM using

free -hor similar commands before and after adjustments.

- Consider adding swap memory to your system:

-

Optimize Model Parameters:

- Experiment with different values for

max_workspace_size, starting with smaller sizes like 512MB. - Use a smaller model if possible or simplify your current model architecture.

- Experiment with different values for

-

Use ONNX Format:

- Convert your PyTorch model to ONNX format first using:

torch.onnx.export(model, dummy_input, "model.onnx") - Then convert the ONNX model to TensorRT using

trtexecwith options that exclude cuDNN:trtexec --onnx=model.onnx --tacticSources=-CUDNN

- Convert your PyTorch model to ONNX format first using:

-

Monitor System Logs:

- Check system logs for any additional error messages that could provide insight into why processes are being killed.

- Use commands like

dmesgor check/var/log/syslog.

-

Best Practices for Future Prevention:

- Regularly update your JetPack and TensorRT versions to benefit from bug fixes and performance improvements.

- Keep track of memory usage and system load while performing intensive tasks.

-

Community Support:

- Engage with community forums for shared experiences and solutions; other users may have encountered similar issues and found effective workarounds.

By following these steps, users can effectively diagnose and potentially resolve issues related to TensorRT conversions on the Nvidia Jetson Orin Nano Developer Kit, enhancing their development experience and optimizing their applications.