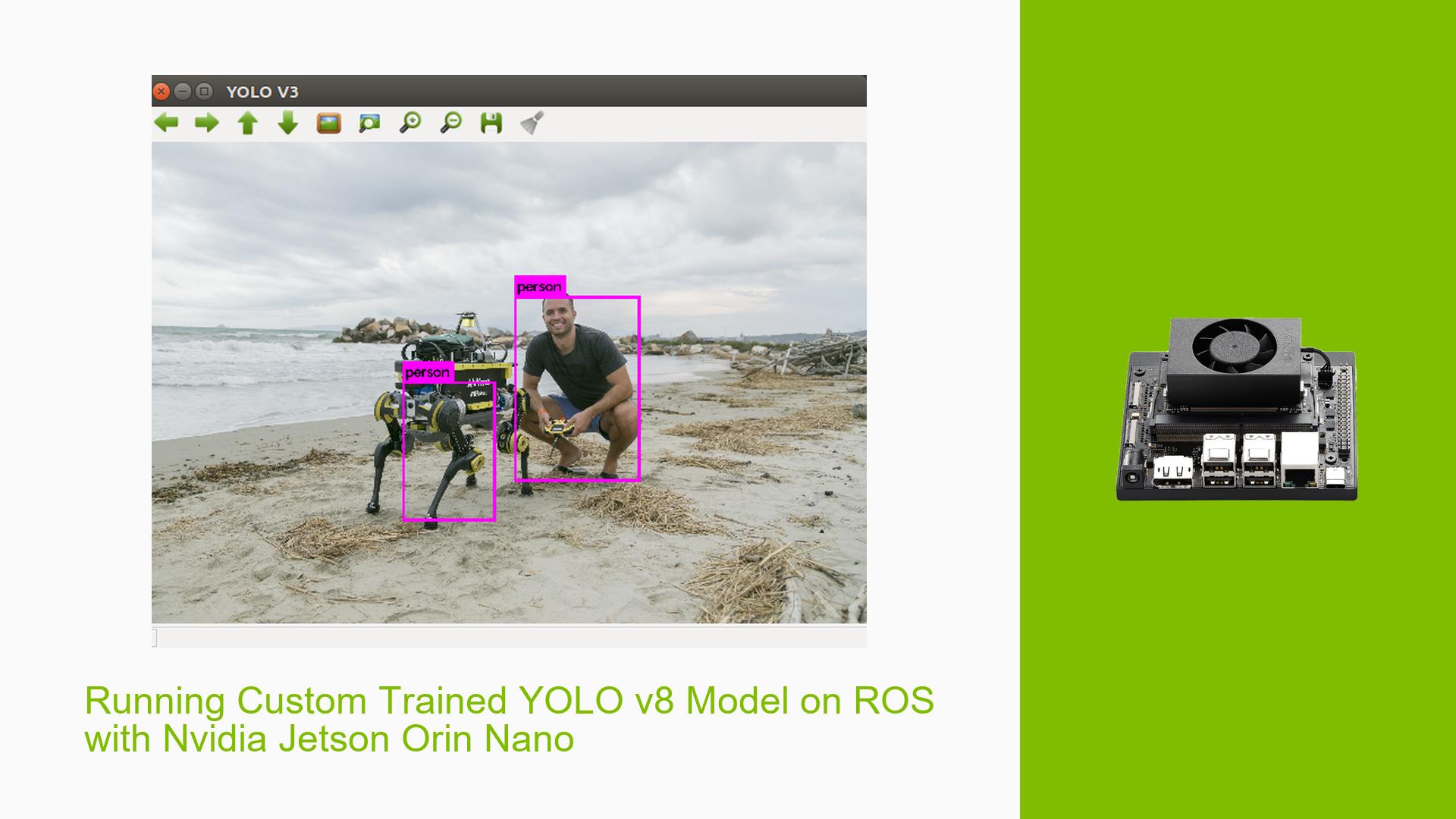

Running a Custom-Trained YOLOv8 Model on ROS with the NVIDIA Jetson Orin Nano

Issue Overview

Users report difficulty running a custom-trained YOLOv8 model on the NVIDIA Jetson Orin Nano developer board under the Robot Operating System (ROS). The main symptoms are trouble integrating the YOLOv8 model into ROS and uncertainty about which files must be modified to make the integration work.

The issue typically arises during setup, when users try to adapt existing YOLO implementations (such as YOLOv4 or YOLOv5) to their custom-trained models. Reported success varies, and some users struggle to find documentation or examples written for YOLOv8. The practical impact is that object detection cannot be deployed in the robotic application until the integration works.

Possible Causes

- Hardware Incompatibilities: The Jetson Orin Nano may have specific requirements or limitations that could affect performance or compatibility with certain models.

- Software Bugs or Conflicts: Issues may arise from software versions that are not compatible with each other, particularly between ROS and TensorRT.

- Configuration Errors: Incorrect settings in ROS or the model configuration files could lead to failures in running the model.

- Driver Issues: Outdated or improperly installed drivers for CUDA or TensorRT could cause performance problems.

- Environmental Factors: Power supply issues or overheating could affect the performance of the Jetson board.

- User Errors or Misconfigurations: Users may not be familiar with creating ROS nodes or modifying existing codebases effectively.
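To rule out overheating as an environmental factor, the board's temperature sensors can be read directly. The sketch below is a minimal example that assumes the standard Linux sysfs layout (`/sys/class/thermal/thermal_zone*/`), where `temp` holds millidegrees Celsius. The 80 °C limit is an arbitrary illustrative threshold, not a figure from NVIDIA or from the source.

```python
from pathlib import Path
from typing import Dict, List


def read_thermal_zones(base: str = "/sys/class/thermal") -> Dict[str, float]:
    """Return {zone type: temperature in deg C} for every readable thermal zone."""
    readings: Dict[str, float] = {}
    for zone in sorted(Path(base).glob("thermal_zone*")):
        try:
            name = (zone / "type").read_text().strip()
            millidegrees = int((zone / "temp").read_text().strip())
        except (OSError, ValueError):
            continue  # some zones cannot be read or report no number; skip them
        readings[name] = millidegrees / 1000.0
    return readings


def hot_zones(readings: Dict[str, float], limit_c: float = 80.0) -> List[str]:
    """Names of zones at or above limit_c (the default limit is an assumption)."""
    return sorted(name for name, temp in readings.items() if temp >= limit_c)


if __name__ == "__main__":
    zones = read_thermal_zones()
    for name, temp in zones.items():
        print(f"{name}: {temp:.1f} C")
    print("Zones over limit:", hot_zones(zones) or "none")
```

Running this while the model is under load gives a quick indication of whether temperature is a plausible cause before looking at software. On Jetson boards, NVIDIA's `tegrastats` utility reports similar readings.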

Troubleshooting Steps, Solutions & Fixes

- Diagnosing the Problem:

  - Ensure that your Jetson Orin Nano is updated with the latest software and drivers.

  - Verify that all dependencies for ROS and your model are correctly installed. Start by refreshing the package index and applying pending upgrades:

    sudo apt-get update && sudo apt-get upgrade
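Package upgrades do not confirm that the Python modules your node needs are actually importable. A minimal sketch of such a check follows, using only the standard library. The names in `REQUIRED_MODULES` are assumptions about a typical ROS 2 plus Ultralytics plus TensorRT stack (the source does not list them), so edit the tuple to match your setup.

```python
import importlib.util
from typing import Dict, Iterable, List

# Assumed top-level module names; adjust to the stack you actually use.
REQUIRED_MODULES = ("rclpy", "cv2", "ultralytics", "tensorrt")


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to True if Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def missing_modules(names: Iterable[str]) -> List[str]:
    """Sorted list of the modules that could not be located."""
    return sorted(name for name, found in check_modules(names).items() if not found)


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED_MODULES).items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

Run it in the same shell (with the same ROS environment sourced) that will launch the node, since module visibility depends on that environment.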

- Gathering System Information:

  - Check system specifications and installed packages:

    dpkg -l | grep ros

    nvcc --version
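The same information can be collected from a script, which is convenient when attaching details to a forum post. This is a minimal sketch: `run_if_available` and `ros_packages` are hypothetical helper names, and unlike `grep ros` the filter matches only installed packages whose name contains "ros".

```python
import shutil
import subprocess
from typing import List, Optional, Sequence


def run_if_available(cmd: Sequence[str], timeout: float = 10.0) -> Optional[str]:
    """Run cmd and return its combined output, or None if the program is not on PATH."""
    if shutil.which(cmd[0]) is None:
        return None
    done = subprocess.run(
        cmd,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        text=True,
        errors="replace",
        timeout=timeout,
        check=False,
    )
    return done.stdout.strip()


def ros_packages(dpkg_listing: str) -> List[str]:
    """Extract installed package names containing 'ros' from `dpkg -l` output."""
    names = []
    for line in dpkg_listing.splitlines():
        fields = line.split()
        # Installed packages carry the status flag "ii"; the name is the second column.
        if len(fields) >= 3 and fields[0] == "ii" and "ros" in fields[1]:
            names.append(fields[1])
    return names


if __name__ == "__main__":
    listing = run_if_available(["dpkg", "-l"])
    print("ROS packages:", ros_packages(listing) if listing else "dpkg not found")
    print(run_if_available(["nvcc", "--version"]) or "nvcc not found on PATH")
```

A missing `nvcc` does not necessarily mean CUDA is absent; the compiler may simply not be on `PATH`.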

- Isolating the Issue:

  - Test with a known working YOLO implementation (e.g., YOLOv4) to confirm that your setup is functional.

  - Create a simple ROS node using basic functionality to ensure that ROS is set up correctly.
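A simple node for checking the ROS setup might look like the sketch below. It assumes ROS 2 with the `rclpy` client library, which the source does not specify; a ROS 1 system would use `rospy` instead. The node name `heartbeat_node` and the topic `heartbeat` are illustrative.

```python
try:
    import rclpy
    from rclpy.node import Node
    from std_msgs.msg import String
except ImportError:  # ROS 2 not sourced; the plain helper below still works
    rclpy = None
    Node = object


def heartbeat_text(count: int) -> str:
    """Build the message payload; kept separate so it can be checked without ROS."""
    return f"heartbeat {count}"


class HeartbeatNode(Node):
    """Publishes a counter on the 'heartbeat' topic once per second."""

    def __init__(self):
        super().__init__("heartbeat_node")
        self.count = 0
        self.publisher = self.create_publisher(String, "heartbeat", 10)
        self.timer = self.create_timer(1.0, self.tick)

    def tick(self):
        msg = String()
        msg.data = heartbeat_text(self.count)
        self.publisher.publish(msg)
        self.count += 1


def main():
    if rclpy is None:
        raise RuntimeError("rclpy not found; source your ROS 2 environment first")
    rclpy.init()
    node = HeartbeatNode()
    try:
        rclpy.spin(node)
    except KeyboardInterrupt:
        pass
    finally:
        node.destroy_node()
        if rclpy.ok():
            rclpy.shutdown()
```

Call `main()` from your package's entry point. If `ros2 topic echo /heartbeat` in a second terminal shows messages arriving, the ROS side is working and any remaining problem lies with the model integration.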

- Modifying Existing Code:

  - To integrate YOLOv8, identify the key files in existing projects (like those mentioned in the forum) where modifications are needed. These typically include:

    - The main node file where inference is called.

    - Configuration files that specify model parameters.

  - Follow examples from repositories that successfully implement YOLO models in ROS.
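The two kinds of files named above map onto two pieces of code: the model parameters and the inference call. The sketch below separates them, assuming the Ultralytics Python package is used for YOLOv8 inference (the source does not name a library). `ModelConfig`, `Detection`, and the default values are illustrative, and `best.pt` is a placeholder for your own weights file.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class ModelConfig:
    """Parameters that would normally live in a config file (names are illustrative)."""
    weights_path: str = "best.pt"  # placeholder path to the custom-trained weights
    min_confidence: float = 0.25
    image_size: int = 640


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    box_xyxy: Tuple[float, ...]  # (x_min, y_min, x_max, y_max) in pixels


def to_detections(
    boxes: Sequence[Sequence[float]],
    confidences: Sequence[float],
    class_ids: Sequence[float],
    names: Dict[int, str],
    min_confidence: float,
) -> List[Detection]:
    """Convert raw box, score, and class lists into Detection records."""
    detections = []
    for box, conf, cls in zip(boxes, confidences, class_ids):
        if conf >= min_confidence:
            detections.append(
                Detection(names[int(cls)], float(conf), tuple(float(v) for v in box))
            )
    return detections


def load_model(config: ModelConfig):
    """Load the weights; imported lazily so the helpers above work without Ultralytics."""
    from ultralytics import YOLO
    return YOLO(config.weights_path)


def run_inference(model, frame, config: ModelConfig) -> List[Detection]:
    """Run one frame through the model and return plain Python records."""
    result = model(
        frame, imgsz=config.image_size, conf=config.min_confidence, verbose=False
    )[0]
    b = result.boxes
    return to_detections(
        b.xyxy.tolist(), b.conf.tolist(), b.cls.tolist(), result.names, config.min_confidence
    )
```

Keeping the conversion in `to_detections` free of any framework types means the same function can feed whichever ROS message type an existing project already publishes.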

- Potential Fixes:

  - If you encounter errors related to TensorRT, consider updating TensorRT to the latest version compatible with your Jetson board.

  - If existing projects do not meet your needs, create a ROS node around your YOLOv8 inference code. Refer to the ROS documentation for guidance on creating nodes.
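A node wrapped around YOLOv8 inference could be sketched as follows. This is not taken from any of the existing projects; it assumes ROS 2 (`rclpy`), `cv_bridge` for image conversion, and the Ultralytics package. The parameter names, the default topic `/camera/image_raw`, and the choice to publish detections as a JSON string are all illustrative simplifications.

```python
import json

try:
    import rclpy
    from rclpy.node import Node
    from sensor_msgs.msg import Image
    from std_msgs.msg import String
    from cv_bridge import CvBridge
    from ultralytics import YOLO
except ImportError:  # lets the JSON helper be used on a machine without ROS
    rclpy = None
    Node = object


def detections_to_json(boxes, confidences, class_ids, names) -> str:
    """Serialize parallel lists of boxes, scores, and class ids to a JSON array."""
    items = [
        {
            "label": names[int(cls)],
            "confidence": round(float(conf), 3),
            "box_xyxy": [float(v) for v in box],
        }
        for box, conf, cls in zip(boxes, confidences, class_ids)
    ]
    return json.dumps(items)


class Yolov8Node(Node):
    """Subscribes to an image topic and publishes detections as JSON text."""

    def __init__(self):
        super().__init__("yolov8_node")
        # Parameter names and defaults are assumptions; override them at launch.
        self.declare_parameter("weights_path", "best.pt")
        self.declare_parameter("image_topic", "/camera/image_raw")
        weights = self.get_parameter("weights_path").value
        topic = self.get_parameter("image_topic").value
        self.model = YOLO(weights)
        self.bridge = CvBridge()
        self.publisher = self.create_publisher(String, "yolov8/detections", 10)
        self.subscription = self.create_subscription(Image, topic, self.on_image, 10)

    def on_image(self, msg):
        frame = self.bridge.imgmsg_to_cv2(msg, desired_encoding="bgr8")
        result = self.model(frame, verbose=False)[0]
        b = result.boxes
        out = String()
        out.data = detections_to_json(
            b.xyxy.tolist(), b.conf.tolist(), b.cls.tolist(), result.names
        )
        self.publisher.publish(out)


def main():
    if rclpy is None:
        raise RuntimeError("rclpy, cv_bridge, or ultralytics could not be imported")
    rclpy.init()
    node = Yolov8Node()
    try:
        rclpy.spin(node)
    except KeyboardInterrupt:
        pass
    finally:
        node.destroy_node()
        if rclpy.ok():
            rclpy.shutdown()
```

A JSON string keeps the sketch free of extra message packages; a real deployment would more likely publish a structured detection message. If TensorRT acceleration is wanted, Ultralytics can export a TensorRT engine with `YOLO("best.pt").export(format="engine")`, and the resulting `.engine` file can be passed as `weights_path`. The export should be run on the Jetson itself so the engine matches the installed TensorRT version.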

- Best Practices:

  - Regularly check for updates in both ROS and TensorRT to avoid compatibility issues.

  - Document any changes made during integration for future reference.

- Further Investigation:

  - If problems persist, consider reaching out to community forums or exploring additional GitHub projects related to YOLO and ROS for more tailored solutions.

By following these steps and drawing on community resources, users can troubleshoot issues with running custom-trained YOLOv8 models on the NVIDIA Jetson Orin Nano within a ROS environment.