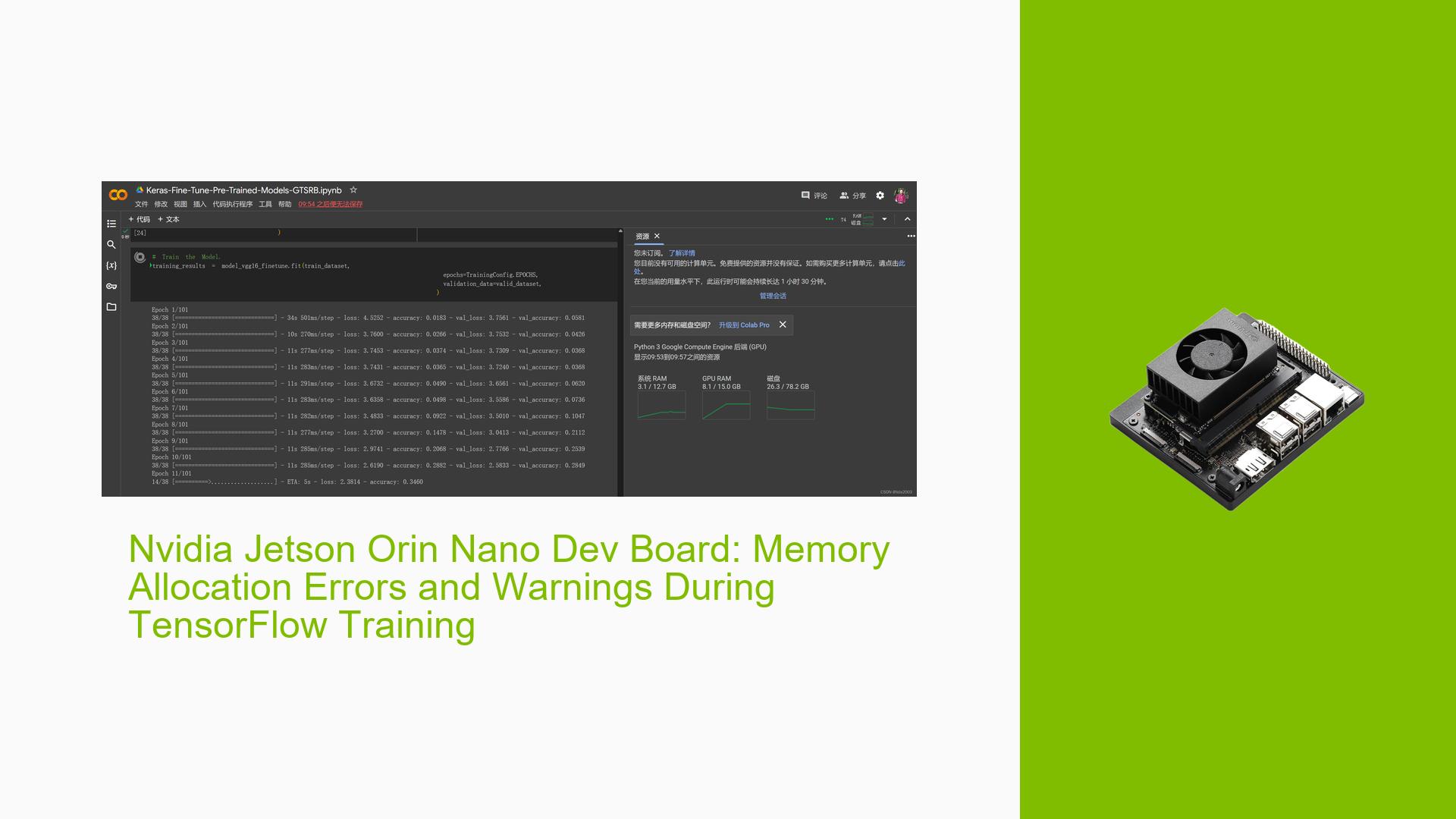

Nvidia Jetson Orin Nano Dev Board: Memory Allocation Errors and Warnings During TensorFlow Training

Issue Overview

Users have reported memory allocation errors and warnings when running TensorFlow training on the Nvidia Jetson Orin Nano Dev Board. The issues appear after switching from the CPU build of TensorFlow (2.16.1) to NVIDIA's GPU build (2.15.0+nv24.03). Symptoms include out-of-memory errors, errors stating that the cuDNN, cuFFT, and cuBLAS factories could not be registered, and warnings that a NUMA node file could not be opened. Together these problems slow training and prevent it from completing.

Possible Causes

There are several potential causes for the memory allocation issues on the Jetson Orin Nano Dev Board:

- Insufficient memory: The Jetson Orin Nano Dev Board has a unified memory architecture in which the CPU and GPU share the same 8GB of RAM. By default, TensorFlow's GPU allocator reserves a large share of the memory visible to the GPU when the GPU is first initialized. On this board that also takes memory away from the CPU side, leaving too little for the training task itself.

- Incompatibility between TensorFlow versions: Switching from the CPU version (2.16.1) to the GPU version (2.15.0+nv24.03) also means moving to an older TensorFlow release, which may introduce compatibility issues. The GPU version may also have different memory requirements or use memory differently, leading to out-of-memory errors.

- Incorrect batch size: The batch size used in the training script may be too large for the available memory on the Jetson Orin Nano. Large batch sizes can quickly exhaust the memory, resulting in out-of-memory errors.

- NUMA node file access issues: Warnings that TensorFlow could not open a NUMA node file suggest that the Jetson Orin Nano kernel was built without NUMA support. Because the Orin Nano has a single memory node, TensorFlow falls back to node zero, so these warnings are usually informational; any effect on memory allocation or performance is likely to be minor.

- Conflicting library registrations: Errors about registering the cuDNN, cuFFT, and cuBLAS factories report that a factory for the same library had already been registered. These messages are widely reported on otherwise working installations and do not by themselves stop training, but they can also accompany incompatible library versions or an improper installation.
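
Because the CPU and GPU draw from the same pool of RAM, the memory TensorFlow can actually use is bounded by what Linux reports as available system memory. The following is a minimal standard-library sketch for checking that headroom before starting a training run. It assumes a Linux `/proc/meminfo` (present on Jetson Linux), and the function names `parse_meminfo` and `available_mib` are hypothetical.

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; values are in kB when a unit is given."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        # Keep only fields whose first token is a plain integer (e.g. "2048000 kB" or "0").
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def available_mib(path: str = "/proc/meminfo") -> float:
    """Return MemAvailable in MiB: on a unified-memory board this headroom is shared by CPU and GPU."""
    with open(path, encoding="ascii") as f:
        info = parse_meminfo(f.read())
    return info["MemAvailable"] / 1024.0


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        print(f"MemAvailable: {available_mib():.0f} MiB")
```

Running this right before training (and comparing it with the figure after the model is built) gives a rough idea of how much of the shared memory is left for the batch data.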
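
To check the explanation behind the NUMA warnings on a given board, one can look at whether the running kernel exposes any NUMA nodes at all. Linux typically creates per-node `nodeN` directories under `/sys/devices/system/node` only when it is built with NUMA support. The sketch below counts them; the function name `numa_node_count` is hypothetical, and a result of zero is simply consistent with the "kernel may have been built without NUMA support" warning.

```python
import os
import re

# Matches directory names such as "node0", "node1", ...
_NODE_DIR = re.compile(r"^node\d+$")


def numa_node_count(root: str = "/sys/devices/system/node") -> int:
    """Count nodeN directories under root; 0 means the kernel exposes no NUMA topology."""
    if not os.path.isdir(root):
        return 0
    return sum(
        1
        for name in os.listdir(root)
        if _NODE_DIR.match(name) and os.path.isdir(os.path.join(root, name))
    )


if __name__ == "__main__":
    print(f"NUMA nodes exposed by the kernel: {numa_node_count()}")
```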

Troubleshooting Steps, Solutions & Fixes

- Verify TensorFlow compatibility: Ensure that the TensorFlow version in use is compatible with the Jetson Orin Nano Dev Board. Check the official documentation and release notes for any known issues or recommended versions specific to the Jetson platform.

- Reduce batch size: Experiment with smaller batch sizes in the training script. Gradually decrease the batch size until the out-of-memory errors are resolved. This allows training to proceed within the available memory constraints.

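- Monitor memory usage: Use a system monitoring tool such as `tegrastats` (included with JetPack) or `jtop` (from the third-party jetson-stats package) to track memory usage during training. Because the CPU and GPU share the same RAM, watch overall memory use rather than a separate GPU figure; `nvidia-smi`, familiar from desktop GPUs, may not report memory usage for the Jetson's integrated GPU. Monitoring shows how much memory is being consumed and helps identify memory leaks or excessive usage.

- Optimize memory usage in the code: Review the training code and look for opportunities to optimize memory usage. This may include: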

  - Releasing unnecessary tensors and variables
  - Using memory-efficient data types
  - Implementing data generators or data streaming to load data in smaller batches
  - Utilizing TensorFlow’s `tf.data` API for efficient data loading and processing

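- Update the NVIDIA software stack: On Jetson, the GPU driver, CUDA, and the cuDNN, cuFFT, and cuBLAS libraries are delivered as part of JetPack rather than installed separately. Keep JetPack up to date and make sure the TensorFlow wheel was built for the installed JetPack release, since a mismatch between TensorFlow and these libraries can lead to the observed errors.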

- Rebuild the kernel with NUMA support: If the NUMA node warnings persist and are a concern, consider rebuilding the Jetson Orin Nano kernel with NUMA support enabled. This may silence the warnings, but because the Orin Nano has a single memory node, it is unlikely to change how much memory is available for training.

- Consider alternative platforms: If the memory constraints on the Jetson Orin Nano remain a persistent limitation, consider training on a platform with more memory. Cloud services such as Google Colab or AWS EC2 instances with GPU support can provide the necessary memory and computational power.
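
A common mitigation for the shared-memory problem is to stop TensorFlow from reserving most of the GPU-visible memory up front. The sketch below uses TensorFlow's `tf.config.experimental.set_memory_growth` (allocate on demand) or, alternatively, a fixed per-process cap through `tf.config.set_logical_device_configuration`. The helper name `configure_gpu_memory` is hypothetical, the optional-import guard exists only so the helper can be imported without TensorFlow, and whether either setting resolves the specific errors above has not been verified on this board.

```python
try:
    import tensorflow as tf
except ImportError:  # keeps the helper importable on machines without TensorFlow
    tf = None


def configure_gpu_memory(memory_limit_mib=None):
    """Keep TensorFlow from reserving most of the shared RAM when the GPU is initialized.

    With memory_limit_mib=None, memory growth is enabled so TensorFlow allocates
    GPU memory on demand. Otherwise a fixed cap (in MiB) is applied instead of
    memory growth.

    Must be called before any op runs on the GPU, or TensorFlow raises RuntimeError.
    Returns the number of GPUs that were configured.
    """
    if tf is None:
        return 0
    gpus = tf.config.list_physical_devices("GPU")
    for gpu in gpus:
        if memory_limit_mib is None:
            tf.config.experimental.set_memory_growth(gpu, True)
        else:
            tf.config.set_logical_device_configuration(
                gpu,
                [tf.config.LogicalDeviceConfiguration(memory_limit=memory_limit_mib)],
            )
    return len(gpus)


if __name__ == "__main__":
    print(f"Configured {configure_gpu_memory()} GPU(s)")
```

Call it at the very top of the training script, before the model is built, for example `configure_gpu_memory()` or `configure_gpu_memory(memory_limit_mib=4096)` (the 4096 MiB cap is only an illustrative value). Setting the environment variable `TF_FORCE_GPU_ALLOW_GROWTH=true` before launching the script is a commonly used alternative to the memory-growth option.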
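
The "reduce batch size" step can be automated by halving the batch size until a short trial step succeeds. This is a framework-agnostic sketch with hypothetical names: `run_step` is a caller-supplied function that runs one or a few training steps at the given batch size, and `oom_errors` lists the exception types treated as out-of-memory. With TensorFlow, out-of-memory conditions are usually raised as `tf.errors.ResourceExhaustedError`.

```python
from typing import Callable, Tuple, Type


def find_max_batch_size(
    run_step: Callable[[int], None],
    start: int = 64,
    minimum: int = 1,
    oom_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> int:
    """Halve the batch size until run_step(batch_size) finishes without an out-of-memory error.

    Exceptions not listed in oom_errors propagate unchanged.
    With TensorFlow, pass oom_errors=(tf.errors.ResourceExhaustedError,).
    """
    batch_size = start
    while batch_size >= minimum:
        try:
            run_step(batch_size)
            return batch_size
        except oom_errors:
            batch_size //= 2  # back off and retry with half the batch
    raise RuntimeError(f"no batch size >= {minimum} fits in memory")
```

Memory may not be fully released after an out-of-memory error inside a single process, so it is worth re-running the chosen batch size in a fresh process before a long training run.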
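
The suggestion to use data generators or data streaming amounts to never holding more than one batch of raw data in memory at a time. A minimal, framework-agnostic sketch follows; `batched` and `load_samples` are hypothetical helper names, and reading each file as raw bytes stands in for whatever decoding the real dataset needs.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(samples: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield lists of up to batch_size items, pulling from samples lazily.

    The batch_size check runs when iteration starts, since this is a generator.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    it = iter(samples)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


def load_samples(paths: Iterable[str]) -> Iterator[bytes]:
    """Read one file at a time instead of loading the whole dataset up front."""
    for path in paths:
        with open(path, "rb") as f:
            yield f.read()
```

With TensorFlow, the same idea is usually expressed through `tf.data`, for example `tf.data.Dataset.from_tensor_slices(paths).map(decode_fn).batch(batch_size).prefetch(tf.data.AUTOTUNE)`, where `decode_fn` is a hypothetical per-file parsing function built from TensorFlow ops such as `tf.io.read_file`.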

It is worth emphasizing that the Jetson Orin Nano Dev Board is designed primarily for edge inference rather than training. Training on the device is possible, but the board may not suit memory-intensive training workloads, and careful testing and optimization are needed to fit training within the available memory.