Is 16GB the Minimum Requirement to Run VLM on Jetson Orin Nano?

Issue Overview

Users are experiencing difficulties when attempting to run the VLM (Vision Language Model) on the 8GB Nvidia Jetson Orin Nano Dev board. The primary symptoms include:

- Model Loading Failure: The VLM fails to load, and the system freezes as soon as loading starts.

- Extended Wait Time: Users reported waiting for over 20 minutes without any progress during the model loading phase.

- Context of the Issue: The problem occurs specifically during the setup of VLM, after following a troubleshooting guide that recommends adding swap memory.

The issue appears to be linked to hardware specifications, particularly RAM capacity. The official documentation suggests that a 16GB Jetson Orin module may be necessary for optimal performance (the Orin Nano itself is available only in 4GB and 8GB versions; 16GB is offered on the Jetson Orin NX). The problem appears to occur consistently for users running VLM on an 8GB configuration.

The impact on users is significant: if the model cannot load, VLM features are unavailable, which hinders development and testing.

Possible Causes

Several potential causes for this issue have been identified:

- Memory Limitations: The VLM may require more memory than an 8GB configuration provides, causing the load to fail.

- Swap Configuration Issues: Although adding swap is recommended, incorrect configuration or too little total swap space can still lead to system instability or freezing.

- Software Bugs or Conflicts: Bugs in the VLM software may affect its compatibility with lower-memory configurations.

- Environmental Factors: Power supply problems or overheating could worsen performance, particularly when additional swap is in use.

- User Misconfiguration: Setting up swap space incorrectly or skipping recommended steps could cause loading failures.
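
To check the Environmental Factors cause, temperatures can be read from the standard Linux thermal sysfs interface, which reports each zone's temperature in millidegrees Celsius. The function below is a minimal sketch that assumes this interface is present (it is on Jetson's L4T kernel); the function name is hypothetical and zone names differ between boards. Jetson's bundled tegrastats utility reports temperatures as well.

```bash
#!/usr/bin/env bash
# Sketch: print each thermal zone's name and temperature in degrees Celsius.
# The base directory defaults to /sys/class/thermal but can be overridden.
print_thermal_zones() {
  local base="${1:-/sys/class/thermal}"
  local zone name milli
  for zone in "$base"/thermal_zone*; do
    # Skip anything without a readable temperature file (including an unmatched glob).
    [ -r "$zone/temp" ] || continue
    name=$(cat "$zone/type" 2>/dev/null || echo unknown)
    milli=$(cat "$zone/temp")
    # Convert integer millidegrees to degrees with one decimal place.
    awk -v n="$name" -v t="$milli" 'BEGIN { printf "%s %.1f\n", n, t / 1000 }'
  done
}
```

Running print_thermal_zones repeatedly while the model loads shows whether temperatures climb toward throttling levels before the freeze.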

Troubleshooting Steps, Solutions & Fixes

To address the issues with running VLM on an 8GB Jetson Orin Nano, work through the following steps:

Verify Hardware Specifications

- Confirm that your Jetson Orin Nano is the 8GB version.
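
One way to confirm which module you have is to read the device-tree model string and the kernel's MemTotal figure. The sketch below assumes a standard JetPack/L4T install, where the model string is exposed at /proc/device-tree/model; the function names are hypothetical. Expect MemTotal to be somewhat below the nominal 8GB, because part of memory is reserved by firmware and the kernel. On the Orin Nano the GPU shares this same memory with the CPU, so model weights loaded on the GPU count against it too.

```bash
#!/usr/bin/env bash
# Sketch: report the board model and installed RAM.
# Assumption: the model string is at /proc/device-tree/model (true on Jetson);
# it is skipped if the file is absent.

# Print MemTotal in GiB with one decimal place.
# /proc/meminfo reports sizes in kB (units of 1024 bytes).
mem_total_gib() {
  local meminfo="${1:-/proc/meminfo}"
  awk '$1 == "MemTotal:" { printf "%.1f\n", $2 / 1048576 }' "$meminfo"
}

show_board() {
  if [ -r /proc/device-tree/model ]; then
    # The device-tree string ends in a NUL byte; strip it before printing.
    printf 'Model: %s\n' "$(tr -d '\0' < /proc/device-tree/model)"
  fi
  printf 'MemTotal: %s GiB\n' "$(mem_total_gib)"
}
```

After sourcing the file, run show_board on the Jetson, or pass a saved meminfo file to mem_total_gib to inspect another system's figures.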

-

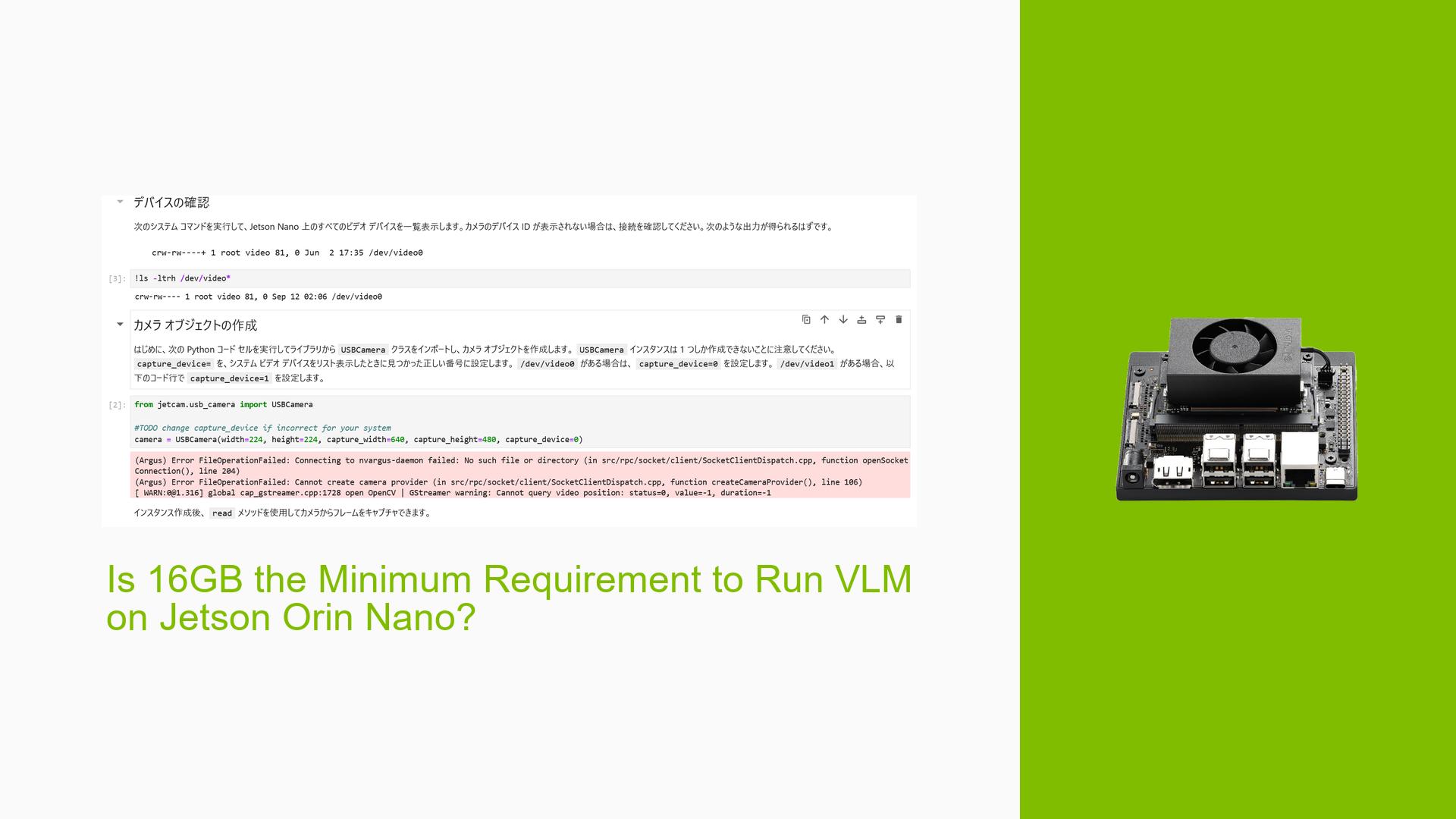

Check Swap Configuration

- Confirm that swap space has been added correctly. You can check the current swap settings with:

    swapon --show

- To add 8GB of additional swap space, run:

    sudo fallocate -l 8G /swapfile
    sudo chmod 600 /swapfile
    sudo mkswap /swapfile
    sudo swapon /swapfile
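
The swapon command enables the swap file only until the next reboot. A common way to make it persistent is an /etc/fstab entry of the form "/swapfile none swap sw 0 0". The hypothetical helper below appends that line only if no entry for the path exists yet, so running it twice is harmless. It writes to /etc/fstab by default and therefore must be run as root (for example from a sudo -i shell); the second argument lets it target another file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add a swap entry to an fstab-format file if one is missing,
# so the swap file is re-enabled automatically after a reboot.
add_swap_fstab_entry() {
  local swapfile="$1"
  local fstab="${2:-/etc/fstab}"
  # Compare against the first field only, so any existing entry for this path counts.
  if awk -v f="$swapfile" '$1 == f { found = 1 } END { exit !found }' "$fstab" 2>/dev/null; then
    echo "entry for $swapfile already present in $fstab"
  else
    printf '%s none swap sw 0 0\n' "$swapfile" >> "$fstab"
    echo "added $swapfile to $fstab"
  fi
}
```

A typical call is add_swap_fstab_entry /swapfile; afterwards, swapon --show after a reboot should still list /swapfile.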

Monitor System Resources

- Use top or htop to monitor CPU and memory usage while attempting to load the model.
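
On Jetson, the bundled tegrastats utility also reports RAM and swap use. As an alternative that needs only standard tools, the sketch below reads /proc/meminfo and appends a timestamped line to a log file; the function names and the default log file name are placeholders. Because the board may freeze, a log on disk can be more useful than an interactive monitor.

```bash
#!/usr/bin/env bash
# Sketch: log available RAM and free swap while the model loads.

# Print "MemAvailable=<n>MiB SwapFree=<n>MiB" from a meminfo-format file.
mem_snapshot() {
  local meminfo="${1:-/proc/meminfo}"
  awk '$1 == "MemAvailable:" { avail = $2 }
       $1 == "SwapFree:"     { swap = $2 }
       END { printf "MemAvailable=%dMiB SwapFree=%dMiB\n", avail / 1024, swap / 1024 }' "$meminfo"
}

# Append a timestamped snapshot to a log file every INTERVAL seconds
# until interrupted with Ctrl+C.
watch_memory() {
  local interval="${1:-5}" log="${2:-mem_log.txt}"
  while true; do
    printf '%s %s\n' "$(date +%H:%M:%S)" "$(mem_snapshot)" | tee -a "$log"
    sleep "$interval"
  done
}
```

Start watch_memory 5 in a second terminal (or over SSH) before loading the model. If the board freezes, the last lines in the log may show how close RAM and swap were to exhaustion.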

Test with a Minimal Configuration

- Try running VLM with a minimal configuration or a small dataset to see whether it loads successfully.
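
Before each minimal-configuration attempt, it may help to confirm how much memory is actually free. Stopping the graphical desktop (for example with sudo init 3, which switches to the non-graphical multi-user target on systemd-based systems) is a common way to free extra memory for such a test. The hypothetical check below succeeds only if MemAvailable plus SwapFree meets a threshold you supply; this document gives no official per-model figure, so the number is your own assumption.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: return success only if MemAvailable + SwapFree
# is at least REQUIRED_GIB. The threshold is user-supplied, not an official value.
has_headroom() {
  local required_gib="$1" meminfo="${2:-/proc/meminfo}"
  awk -v req="$required_gib" '
    $1 == "MemAvailable:" { avail = $2 }
    $1 == "SwapFree:"     { swap = $2 }
    END {
      have = (avail + swap) / 1048576   # kB -> GiB
      printf "available: %.1f GiB, required: %s GiB\n", have, req
      exit (have < req + 0)             # exit status 1 when there is not enough
    }' "$meminfo"
}
```

For example, has_headroom 10 && echo "try loading" prints the measured headroom and proceeds only when at least 10 GiB (an arbitrary example value) is free.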

Consult Documentation

- Review the official Nvidia documentation for any updates or notes regarding memory requirements for VLM:

Nvidia VLM Documentation

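Consider Upgrading Hardware

- If feasible, consider moving to a Jetson module with at least 16GB of RAM, as some users report that this configuration is more stable for running VLM. The Orin Nano itself is sold only in 4GB and 8GB versions, so 16GB means a different module, such as the Jetson Orin NX 16GB.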

Engage with Community Support

- If issues persist, post on forums or other community channels where users share similar experiences.

Recommended Approach

User feedback suggests that at least 16GB of physical memory, plus additional swap, is crucial for running VLM successfully.

- Some users report success with 16GB of physical memory, the 8GB default swap, and an additional 8GB of swap (32GB in total).
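
The combination reported above adds up to 16 + 8 + 8 = 32GB of RAM plus swap. The sketch below computes the same total for the running system from MemTotal and SwapTotal so the two can be compared; the function name is hypothetical and the figures are in GiB. Swap lives on storage and is far slower than RAM, so an 8GB board with extra swap does not behave like a 16GB board even when the totals match.

```bash
#!/usr/bin/env bash
# Sketch: sum installed RAM and configured swap from a meminfo-format file.
# Values in /proc/meminfo are in kB (1024 bytes); 1048576 kB = 1 GiB.
memory_budget_gib() {
  local meminfo="${1:-/proc/meminfo}"
  awk '$1 == "MemTotal:"  { ram = $2 }
       $1 == "SwapTotal:" { swap = $2 }
       END {
         printf "RAM %.1f + swap %.1f = %.1f GiB\n",
                ram / 1048576, swap / 1048576, (ram + swap) / 1048576
       }' "$meminfo"
}
```

Running memory_budget_gib on the board shows how far the current setup is from the reported 32GB total.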

Unresolved Aspects

Further investigation may be needed into:

- Specific software bugs related to lower memory configurations.

- Detailed performance metrics when using different swap configurations.

- Official Nvidia responses regarding minimum requirements for effective use of VLM on lower-RAM devices.