Inconsistent TensorFlow Behavior on Nvidia Jetson Orin Nano

Issue Overview

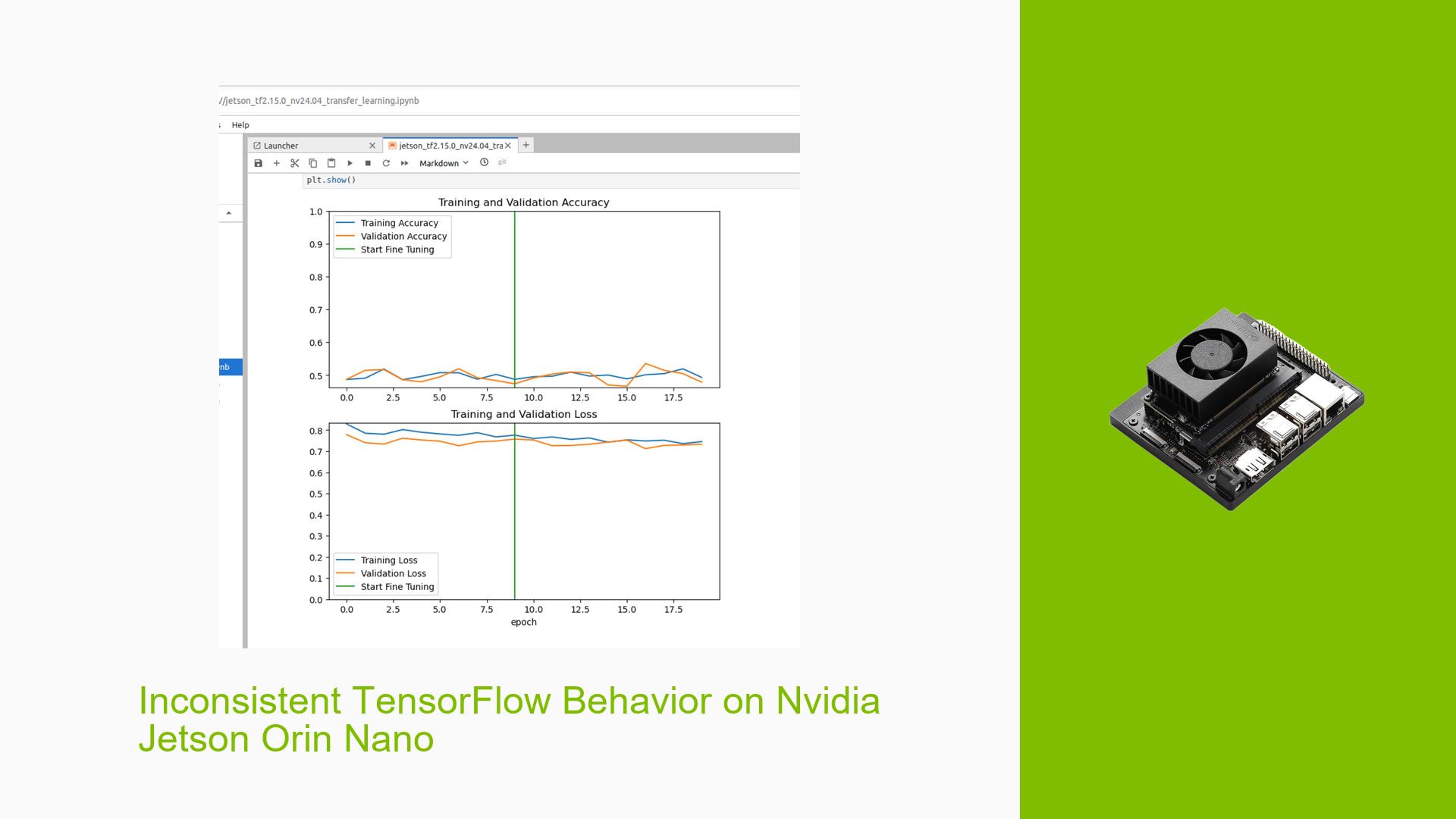

Users report that TensorFlow code behaves differently on the Nvidia Jetson Orin Nano Developer Board than on Google Colab or in the official TensorFlow documentation. The issue concerns a transfer learning and fine-tuning example: on the Jetson Orin Nano, its predictions are unexpected and differ from those obtained on other platforms.

The problem occurs with TensorFlow 2.15.0+nv24.03 or 2.15.0+nv24.04 on a Jetson Orin Nano running JetPack 6.0 Developer Preview (DP). Training appears to run normally, but the prediction phase produces incorrect results: every output label appears to be 1 (dog), regardless of the input.
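A quick way to confirm the symptom is to look at the distribution of predicted labels rather than at individual predictions. The sketch below assumes the model outputs a single logit per image and that labels come from a sigmoid thresholded at 0.5 (0 = cat, 1 = dog), the convention used in TensorFlow's transfer-learning tutorial. The function names and the 95% collapse threshold are hypothetical choices. Printing the raw logits alongside the labels also shows whether the model output itself has collapsed or only the thresholded labels.

```python
import math
from collections import Counter


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logits_to_labels(logits, threshold=0.5):
    """Map raw logits to labels: 1 (dog) if sigmoid >= threshold, else 0 (cat)."""
    return [1 if sigmoid(z) >= threshold else 0 for z in logits]


def label_collapse(labels, max_fraction=0.95):
    """Report whether one label accounts for at least max_fraction of predictions.

    Returns (collapsed, majority_label, fraction).
    """
    if not labels:
        raise ValueError("no labels to check")
    label, count = Counter(labels).most_common(1)[0]
    fraction = count / len(labels)
    return fraction >= max_fraction, label, fraction


if __name__ == "__main__":
    # Hypothetical logits; in practice use model.predict(...).flatten()
    logits = [2.3, -1.7, 0.4, -3.1, 1.2, -0.8]
    labels = logits_to_labels(logits)
    collapsed, label, fraction = label_collapse(labels)
    print(labels, "collapsed" if collapsed else "mixed", label, f"{fraction:.0%}")
```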

Possible Causes

- JetPack Version Incompatibility: The issue may be related to using JetPack 6.0 DP instead of the General Availability (GA) release.

- TensorFlow Version Mismatch: The specific TensorFlow builds (2.15.0+nv24.03 or 2.15.0+nv24.04) might have compatibility issues with the Jetson Orin Nano hardware or with JetPack 6.0 DP.

- Hardware Limitations: Some errors, such as out-of-memory (OOM) errors, may be due to the Orin Nano's hardware limits; the developer kit's 8 GB of memory is shared between the CPU and GPU.

- CUDA and cuDNN Compatibility: Warnings mentioning cuDNN, cuFFT, and cuBLAS suggest potential compatibility issues between TensorFlow and the CUDA libraries on the Jetson platform.

- Model Architecture Mismatch: The unexpected predictions could be due to a mismatch between the model architecture and what the Jetson Orin Nano's hardware and software stack support.

Troubleshooting Steps, Solutions & Fixes

Upgrade to JetPack 6.0 GA:

- NVIDIA recommends upgrading to JetPack 6.0 GA, the production release, on which NVIDIA has confirmed that the example works correctly.

- Use the SDK Manager to install JetPack 6.0 GA: https://docs.nvidia.com/sdk-manager/install-with-sdkm-jetson/index.html
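Before and after the upgrade, it helps to confirm which L4T (Linux for Tegra) release the board is actually running. The sketch below parses /etc/nv_tegra_release. It assumes the usual first-line format (for example `# R36 (release), REVISION: 3.0, ...`) and the commonly cited mapping of L4T R36.2 to JetPack 6.0 DP and R36.3 to JetPack 6.0 GA; check both assumptions against NVIDIA's release notes for your board. The function and constant names are hypothetical.

```python
import re
from pathlib import Path

# Assumed mapping from L4T (major, minor) to JetPack 6.0 variant; verify it
# against NVIDIA's release notes before relying on it.
JETPACK_6_0_BY_L4T = {
    (36, 2): "JetPack 6.0 DP",
    (36, 3): "JetPack 6.0 GA",
}

# Matches e.g. "# R36 (release), REVISION: 3.0, GCID: ..."
_RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*(\d+)\.(\d+)")


def parse_l4t_release(text):
    """Return (major, minor, patch) from nv_tegra_release text, or None."""
    m = _RELEASE_RE.search(text)
    if m is None:
        return None
    return tuple(int(g) for g in m.groups())


def describe_jetpack(text):
    """Human-readable L4T release plus the JetPack 6.0 variant it maps to."""
    version = parse_l4t_release(text)
    if version is None:
        return "unknown (could not parse L4T release)"
    major, minor, patch = version
    jetpack = JETPACK_6_0_BY_L4T.get(
        (major, minor), "unrecognized (not in the assumed JetPack 6.0 mapping)"
    )
    return f"L4T R{major}.{minor}.{patch}: {jetpack}"


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")
    if release_file.exists():
        print(describe_jetpack(release_file.read_text()))
    else:
        print("/etc/nv_tegra_release not found (not a Jetson?)")
```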


Install Compatible TensorFlow Version:

- After upgrading to JetPack 6.0 GA, install TensorFlow 2.15.0+nv24.04:

sudo pip3 install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v60 tensorflow==2.15.0+nv24.04

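After the install, a quick check that Python actually picks up the NVIDIA build, rather than a stock wheel or an older copy elsewhere on the path, can save time. The sketch below reads the installed version with `importlib.metadata` and compares it with the expected 2.15.0+nv24.04. Because pip may normalize the local label (for example, by dropping the leading zero in "04"), it compares the numeric parts rather than the raw strings. The helper names are hypothetical.

```python
import re
from importlib import metadata

EXPECTED = "2.15.0+nv24.04"  # version from the install command above


def version_key(version):
    """Split '2.15.0+nv24.04' into ((2, 15, 0), (24, 4)), ignoring leading zeros."""
    public, _, local = version.partition("+")
    public_parts = tuple(int(n) for n in re.findall(r"\d+", public))
    local_parts = tuple(int(n) for n in re.findall(r"\d+", local))
    return public_parts, local_parts


def check_tensorflow(expected=EXPECTED, dist="tensorflow"):
    """Return (matches, message) for the installed distribution's version."""
    try:
        installed = metadata.version(dist)
    except metadata.PackageNotFoundError:
        return False, f"{dist} is not installed"
    ok = version_key(installed) == version_key(expected)
    return ok, f"installed {installed}, expected {expected}"


if __name__ == "__main__":
    ok, message = check_tensorflow()
    print("OK" if ok else "MISMATCH", "-", message)
```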

Check for Dependencies:

- Ensure all required dependencies are installed, particularly libhdf5-dev:

sudo apt-get install libhdf5-dev
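A missing system library often shows up only when the Python package that wraps it is imported. The sketch below first checks that a list of modules can be located, then imports each one and reports any failure message, which for a missing native library is usually an ImportError naming the absent .so file. The module list (numpy, h5py, tensorflow) is an assumption; h5py is the package Keras uses for HDF5 (.h5) files, which is where libhdf5 matters.

```python
import importlib
import importlib.util

# Hypothetical list of Python modules to check; adjust for your project.
MODULES = ["numpy", "h5py", "tensorflow"]


def find_missing(modules):
    """Return names of modules that cannot be located on sys.path."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


def try_import(name):
    """Import a module and return (ok, detail); catches broken native libraries."""
    try:
        module = importlib.import_module(name)
    except Exception as exc:  # e.g. ImportError from a missing libhdf5 .so
        return False, f"{type(exc).__name__}: {exc}"
    return True, getattr(module, "__version__", "unknown version")


if __name__ == "__main__":
    missing = find_missing(MODULES)
    for name in MODULES:
        if name in missing:
            print(f"{name}: not installed")
        else:
            ok, detail = try_import(name)
            print(f"{name}: {'ok' if ok else 'FAILED'} ({detail})")
```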

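Monitor Resource Usage:

- Use tegrastats (the Jetson utility for GPU, CPU, and memory load; nvidia-smi provides limited or no information on Jetson devices) and top to monitor GPU and CPU usage during execution and identify potential resource constraints.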
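Because the Orin Nano's CPU and GPU share the same physical memory, system-wide available memory is a useful proxy when chasing OOM errors. The sketch below samples MemTotal and MemAvailable from /proc/meminfo (a standard Linux interface) and can be run in a second terminal while training runs. The sampling interval, sample count, and function names are illustrative assumptions; for long runs, call `sample()` with a larger `count`.

```python
import os
import time


def parse_meminfo(text):
    """Parse /proc/meminfo text into {field: value}, values in kB where applicable."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def used_fraction(info):
    """Fraction of RAM in use, based on MemAvailable (shared with the GPU on Jetson)."""
    total = info["MemTotal"]
    return (total - info["MemAvailable"]) / total


def sample(path="/proc/meminfo", interval_s=1.0, count=3):
    """Print memory usage `count` times, `interval_s` seconds apart."""
    for i in range(count):
        with open(path) as f:
            info = parse_meminfo(f.read())
        print(f"RAM used: {used_fraction(info):.1%} "
              f"({info['MemAvailable'] // 1024} MiB available)")
        if i < count - 1:
            time.sleep(interval_s)


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        sample()
    else:
        print("/proc/meminfo not available on this system")
```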

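Verify CUDA and cuDNN Installation:

- Check the CUDA and cuDNN versions. On JetPack, nvcc lives in /usr/local/cuda/bin and may not be on the PATH; for cuDNN 8 and later, the version macros are in cudnn_version.h rather than cudnn.h:

/usr/local/cuda/bin/nvcc --version
grep CUDNN_MAJOR -A 2 /usr/include/cudnn_version.h

- Ensure they are compatible with the installed TensorFlow version.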
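These version checks can also be scripted so the results sit next to the versions the TensorFlow wheel was built against. The sketch below parses `nvcc --version` output and the CUDNN_MAJOR/CUDNN_MINOR/CUDNN_PATCHLEVEL defines, then, if TensorFlow is importable, prints the CUDA and cuDNN entries from `tf.sysconfig.get_build_info()`. The nvcc location and header path are assumptions about a typical JetPack install, and the helper names are hypothetical.

```python
import re
import subprocess
from pathlib import Path

NVCC = "/usr/local/cuda/bin/nvcc"              # assumed JetPack location
CUDNN_HEADER = "/usr/include/cudnn_version.h"  # assumed cuDNN 8+ header path


def parse_nvcc_release(text):
    """Extract (major, minor) from 'nvcc --version' output, or None."""
    m = re.search(r"release (\d+)\.(\d+)", text)
    return (int(m.group(1)), int(m.group(2))) if m else None


def parse_cudnn_header(text):
    """Extract (major, minor, patch) from cudnn_version.h #define lines, or None."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        m = re.search(rf"#define\s+{name}\s+(\d+)", text)
        if m is None:
            return None
        parts.append(int(m.group(1)))
    return tuple(parts)


def main():
    if Path(NVCC).exists():
        out = subprocess.run([NVCC, "--version"], capture_output=True, text=True).stdout
        print("CUDA (nvcc):", parse_nvcc_release(out))
    if Path(CUDNN_HEADER).exists():
        print("cuDNN (header):", parse_cudnn_header(Path(CUDNN_HEADER).read_text()))
    try:
        import tensorflow as tf
    except ImportError:
        print("TensorFlow not importable; skipping build info")
        return
    info = tf.sysconfig.get_build_info()
    print("TensorFlow built with CUDA", info.get("cuda_version"),
          "and cuDNN", info.get("cudnn_version"))


if __name__ == "__main__":
    main()
```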


Isolate the Issue:

- Try running a simpler TensorFlow example to determine if the problem is specific to the transfer learning code or a general TensorFlow issue.
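One way to narrow the problem down is to run the same small model on the CPU and on the GPU and compare the outputs. If they disagree well beyond floating-point noise, GPU kernels or the CUDA/cuDNN stack become prime suspects; if they agree, basic GPU kernels are less likely to be at fault, and the transfer-learning code, data pipeline, or saved weights deserve a closer look. The sketch below uses a tiny, randomly initialized convolutional model (not the tutorial's model), and the layer sizes and tolerance are arbitrary assumptions. It disables TensorFloat-32, which TensorFlow enables by default on Ampere GPUs such as Orin's, so both devices compute in full float32.

```python
try:
    import numpy as np
    import tensorflow as tf
except ImportError:  # lets the pure-Python helper below run without TensorFlow
    np = tf = None


def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two flat sequences."""
    a, b = list(a), list(b)
    if len(a) != len(b):
        raise ValueError("sequences differ in length")
    return max((abs(x - y) for x, y in zip(a, b)), default=0.0)


def build_model():
    # Arbitrary small model; Conv2D exercises the cuDNN convolution path.
    return tf.keras.Sequential([
        tf.keras.Input(shape=(64, 64, 3)),
        tf.keras.layers.Conv2D(8, 3, activation="relu"),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(1),
    ])


def compare_cpu_gpu(atol=1e-4):
    if not tf.config.list_physical_devices("GPU"):
        print("No GPU visible to TensorFlow")
        return None
    # Full float32 on both devices, so large differences are unlikely to be
    # TF32 rounding effects.
    tf.config.experimental.enable_tensor_float_32_execution(False)
    tf.random.set_seed(0)
    model = build_model()
    x = np.random.default_rng(0).random((16, 64, 64, 3), dtype=np.float32)
    with tf.device("/CPU:0"):
        cpu_out = model(x, training=False).numpy()
    with tf.device("/GPU:0"):
        gpu_out = model(x, training=False).numpy()
    diff = max_abs_diff(cpu_out.ravel().tolist(), gpu_out.ravel().tolist())
    print(f"max |CPU - GPU| = {diff:.2e} -> {'OK' if diff <= atol else 'MISMATCH'}")
    return diff


if __name__ == "__main__":
    if tf is None:
        print("TensorFlow is not installed")
    else:
        compare_cpu_gpu()
```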


Check Model Compatibility:

- Verify that the pre-trained model used in the transfer learning example is compatible with the Jetson Orin Nano’s architecture.


Use TensorFlow Lite:

- Consider using TensorFlow Lite, which is optimized for edge devices like the Jetson Orin Nano.
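If you try TensorFlow Lite, the trained Keras model can be converted with `tf.lite.TFLiteConverter` and checked against the original with `tf.lite.Interpreter` before deployment. This is a minimal sketch using standard tf.lite APIs; the stand-in model is hypothetical and should be replaced by the fine-tuned model. Note that the Python interpreter runs on the CPU unless a delegate is configured, so this path trades GPU acceleration for a simpler runtime.

```python
try:
    import numpy as np
    import tensorflow as tf
except ImportError:
    np = tf = None


def convert_to_tflite(model):
    """Convert a Keras model to a TFLite flatbuffer (bytes)."""
    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    return converter.convert()


def run_tflite(tflite_bytes, x):
    """Run one batch through the TFLite interpreter (CPU unless a delegate is set)."""
    interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    # The converted model usually has batch size 1; resize for the given batch.
    interpreter.resize_tensor_input(inp["index"], x.shape)
    interpreter.allocate_tensors()
    interpreter.set_tensor(inp["index"], x.astype(inp["dtype"]))
    interpreter.invoke()
    return interpreter.get_tensor(out["index"])


if __name__ == "__main__" and tf is not None:
    # Hypothetical stand-in; substitute the fine-tuned model from the example.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),
        tf.keras.layers.Dense(1),
    ])
    x = np.random.default_rng(0).random((4, 8), dtype=np.float32)
    tflite_out = run_tflite(convert_to_tflite(model), x)
    keras_out = model(x, training=False).numpy()
    print("max |Keras - TFLite| =", float(np.max(np.abs(keras_out - tflite_out))))
```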


Report the Issue:

- If the problem persists after upgrading to JetPack 6.0 GA and following these steps, report the issue to NVIDIA’s developer forums or GitHub repository with detailed logs and system information.
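When filing a report, a compact environment summary can save a round of follow-up questions. The sketch below gathers OS and Python details, the first line of /etc/nv_tegra_release, and the installed versions of a few packages into plain text that can be pasted alongside the full logs. The choice of fields is an assumption, not a forum requirement, and the function names are hypothetical.

```python
import platform
from importlib import metadata
from pathlib import Path


def read_first_line(path):
    """First line of a text file, or a short placeholder if it is missing or empty."""
    p = Path(path)
    if not p.exists():
        return "not found"
    lines = p.read_text().splitlines()
    return lines[0].strip() if lines else "empty"


def package_version(name):
    """Installed distribution version, or 'not installed'."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return "not installed"


def environment_report():
    rows = [
        ("Machine", platform.machine()),
        ("Kernel", platform.release()),
        ("Python", platform.python_version()),
        ("L4T release", read_first_line("/etc/nv_tegra_release")),
        ("tensorflow", package_version("tensorflow")),
        ("numpy", package_version("numpy")),
        ("h5py", package_version("h5py")),
    ]
    width = max(len(key) for key, _ in rows)
    return "\n".join(f"{key:<{width}} : {value}" for key, value in rows)


if __name__ == "__main__":
    print(environment_report())
```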


Consider Alternative Frameworks:

- If TensorFlow continues to be problematic, consider using alternative frameworks like PyTorch or ONNX Runtime, which may have better compatibility with the Jetson platform.

By following these steps, users should be able to resolve the inconsistency issues and achieve expected behavior when running TensorFlow on the Nvidia Jetson Orin Nano Developer Board. If problems persist, it’s recommended to seek further assistance from NVIDIA support or the developer community.