GPU Resources Unavailable in Containerd with K8s on Jetson Orin Nano

Issue Overview

Users deploying applications on Jetson Orin Nano 8GB devices with Kubernetes (K8s) and KubeEdge report that containers cannot access GPU resources. Running the nvidia-smi command inside a container displays no GPU information, which indicates that the GPU is not exposed to the container[1].

Possible Causes

- Incorrect NVIDIA device plugin configuration: The NVIDIA device plugin may not be properly set up or configured to expose GPU resources to containers in the Kubernetes environment.

- Containerd runtime configuration issues: The containerd runtime may not be correctly configured to support NVIDIA GPU access.

- Missing or incompatible drivers: The necessary NVIDIA drivers may be missing, outdated, or incompatible with the current system configuration.

- Permissions or security constraints: There might be security policies or permission issues preventing containers from accessing GPU resources.

- KubeEdge configuration: KubeEdge-specific settings may be interfering with GPU resource allocation to containers.

Troubleshooting Steps, Solutions & Fixes

-

Verify NVIDIA Container Toolkit installation:

Ensure that the NVIDIA Container Toolkit is properly installed and configured for containerd:sudo nvidia-ctk runtime configure --runtime=containerd --nvidia-set-as-default sudo systemctl restart containerd -

Check NVIDIA device plugin deployment:

Verify that the NVIDIA device plugin is correctly deployed in your Kubernetes cluster. Use the provided DaemonSet YAML file to deploy or update the plugin[1]. -

Inspect container GPU access:

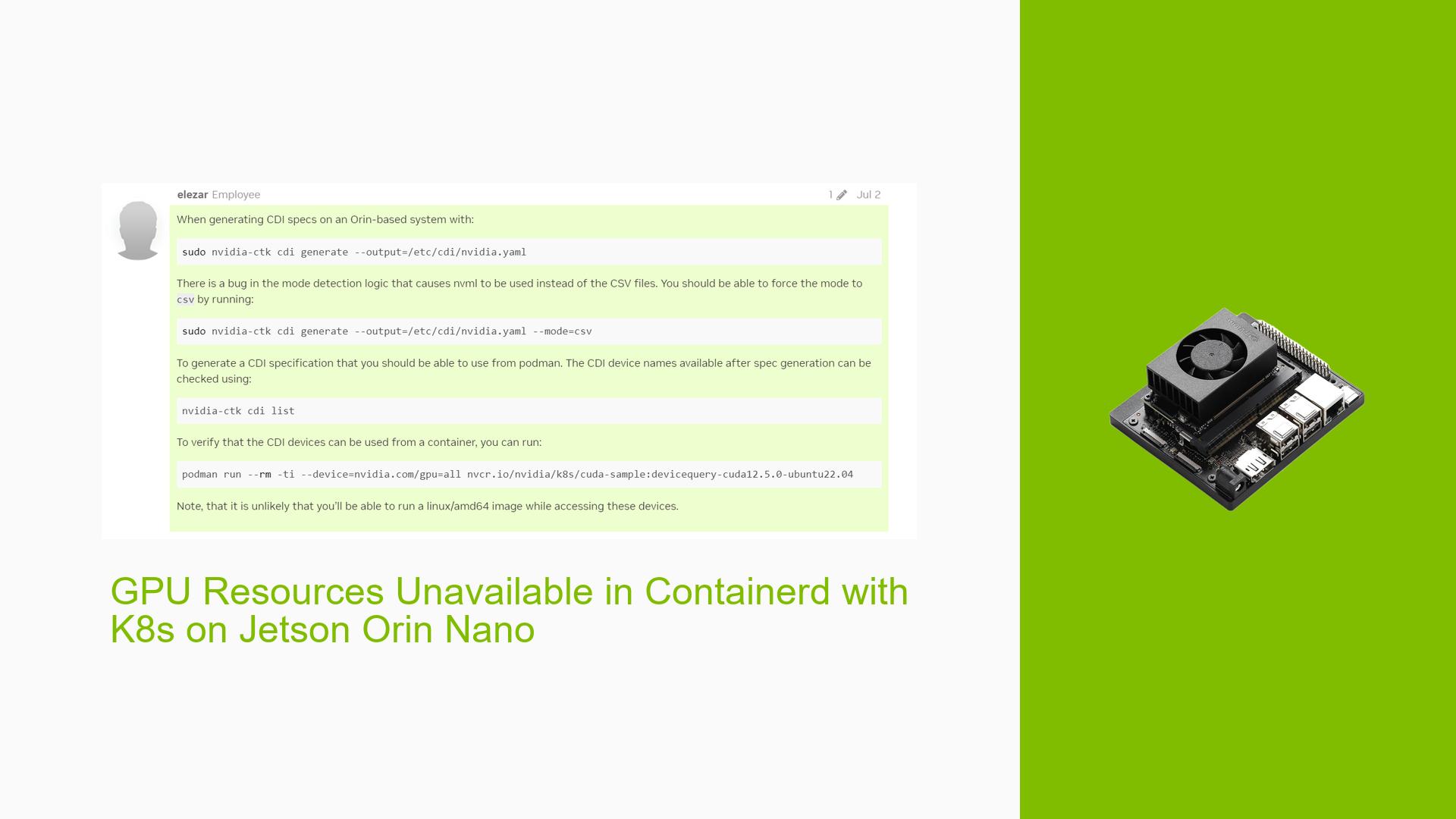

Execute the following command to check if the container can see GPU devices:nvidia-ctk cdi generate --output=/etc/cdi/nvidia.yaml --mode=csv nvidia-ctk cdi listThis should list available GPU devices[1].
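To turn the CDI listing into a yes/no check, its output can be filtered for GPU entries. The sketch below uses two hypothetical helpers, `count_cdi_gpus` and `has_cdi_gpu`, and assumes that `nvidia-ctk cdi list` prints one device per line in the form `nvidia.com/gpu=<name>`; adjust the pattern if your toolkit version formats the list differently.

```shell
# Hypothetical helper: count CDI GPU entries read from standard input.
# Assumes one device per line, e.g. "nvidia.com/gpu=0" (the format is an assumption).
count_cdi_gpus() {
  # grep -c prints 0 but exits non-zero when nothing matches, so mask the status.
  grep -c '^nvidia\.com/gpu=' || true
}

# Hypothetical helper: succeed only if at least one GPU entry is present on stdin.
has_cdi_gpu() {
  [ "$(count_cdi_gpus)" -gt 0 ]
}
```

One possible use is `nvidia-ctk cdi list 2>/dev/null | has_cdi_gpu || echo "no CDI GPU devices found"`, which prints a message only when the list contains no GPU entry.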

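- Update NVIDIA Container Toolkit and GPU Device Plugin:

  Ensure you are using NVIDIA Container Toolkit v1.16.1 or newer and GPU Device Plugin v0.16.0 or newer. These versions have integrated the CSV solution, which may resolve your issue.

- Verify NVIDIA driver installation:

  Check if NVIDIA drivers are correctly installed on the host system:

      nvidia-smi

  If this command fails or shows no output, reinstall or update the NVIDIA drivers. On Jetson, the GPU driver ships as part of JetPack (L4T) rather than as a separate package, so this means repairing or updating the JetPack installation. Depending on the JetPack release, nvidia-smi may be missing or report only limited information on Jetson, so a failure here is not conclusive on its own.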
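The minimum versions mentioned above (v1.16.1 for the toolkit, v0.16.0 for the device plugin) can be checked with a small comparison helper. This is a minimal sketch: `version_at_least` is a hypothetical name, and it relies on `sort -V` from GNU coreutils, which is assumed to be available on the Jetson's Ubuntu-based system.

```shell
# Hypothetical helper: succeed when version $1 is greater than or equal to $2.
# A leading "v" (as in "v1.16.1") is stripped before comparing.
version_at_least() {
  val_have="${1#v}"
  val_want="${2#v}"
  # sort -V orders version strings; if the wanted version sorts first
  # (or both are equal), the installed version is new enough.
  [ "$(printf '%s\n%s\n' "$val_want" "$val_have" | sort -V | head -n 1)" = "$val_want" ]
}
```

For example, `version_at_least "$(nvidia-ctk --version | awk '/version/ {print $NF; exit}')" 1.16.1` would test the installed toolkit, assuming the first line of `nvidia-ctk --version` ends with the version number.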

- Examine container runtime logs:

  Check containerd logs for any GPU-related errors:

      sudo journalctl -u containerd

- Review KubeEdge configuration:

  Ensure that KubeEdge is configured to allow GPU resource allocation. Check the EdgeCore configuration file (typically /etc/kubeedge/config/edgecore.yaml) for any GPU-related settings.

- Test with a GPU-enabled sample pod:

  Deploy a test pod with GPU requirements to isolate whether the issue is specific to your application or a general GPU access problem:

      apiVersion: v1
      kind: Pod
      metadata:
        name: gpu-test-pod
      spec:
        restartPolicy: Never   # run nvidia-smi once instead of restarting the container
        containers:
        - name: gpu-test-container
          image: nvidia/cuda:11.0-base
          command: ["nvidia-smi"]
          resources:
            limits:
              nvidia.com/gpu: 1

  The image must be built for arm64 and be compatible with the installed JetPack release. If nvidia/cuda:11.0-base cannot be pulled or run on the Jetson, substitute an L4T-based image.

- Verify node labels:

  Ensure that your Jetson Orin Nano nodes are properly labeled to allow GPU scheduling:

      kubectl label nodes <node-name> nvidia.com/gpu=present

  A label only affects scheduling when a nodeSelector or node affinity rule refers to it. The allocatable nvidia.com/gpu resource itself is advertised by the device plugin, not by the label.

- Check container runtime interface:

  Verify that the container runtime interface (CRI) is properly configured to use NVIDIA GPUs. Check the containerd configuration file (usually /etc/containerd/config.toml) for any GPU-related settings.
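The containerd configuration check can be partly automated. The sketch below uses a hypothetical helper, `check_containerd_nvidia`, and assumes the layout that `nvidia-ctk runtime configure` is expected to write: a table whose name ends in `runtimes.nvidia]` and, when the runtime is set as default, a `default_runtime_name = "nvidia"` entry. It is a text search, not a TOML parser, so treat its result as a hint.

```shell
# Hypothetical helper: inspect a containerd config for the NVIDIA runtime.
# Returns 0 if the runtime is registered and is the default,
#         1 if no "nvidia" runtime table is found,
#         2 if the runtime is registered but is not the default.
check_containerd_nvidia() {
  ccn_config="${1:-/etc/containerd/config.toml}"

  # Assumption: the runtime table name ends in "runtimes.nvidia]".
  if ! grep -q 'runtimes\.nvidia\]' "$ccn_config"; then
    echo "nvidia runtime: not registered"
    return 1
  fi
  echo "nvidia runtime: registered"

  # Assumption: the default runtime is set via default_runtime_name.
  if grep -Eq '^[[:space:]]*default_runtime_name[[:space:]]*=[[:space:]]*"nvidia"' "$ccn_config"; then
    echo "default runtime: nvidia"
    return 0
  fi
  echo "default runtime: not nvidia"
  return 2
}
```

Calling `check_containerd_nvidia` with no argument inspects /etc/containerd/config.toml. A return value of 2 is not necessarily an error, since pods can also select a non-default runtime through a RuntimeClass.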

If the issue persists after trying these steps, consider reaching out to NVIDIA support or the Jetson community forums for more specific assistance, providing detailed information about your setup and the troubleshooting steps you’ve already taken.
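When asking for help, a single report is easier to share than separate command outputs. The following is a minimal sketch that gathers the outputs of the commands used in the steps above; `run_if_present` and `collect_gpu_report` are hypothetical helper names, and the selection of commands is only a suggestion.

```shell
# Hypothetical helper: run a command if its binary exists, otherwise note the skip.
run_if_present() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "### $*"
    # Keep going on failure, but record the exit status in the report.
    "$@" 2>&1 || echo "(exit status $?)"
  else
    echo "### $1: not installed, skipped"
  fi
}

# Hypothetical helper: print a combined report to standard output.
collect_gpu_report() {
  run_if_present nvidia-ctk --version
  run_if_present nvidia-ctk cdi list
  run_if_present kubectl describe nodes
  run_if_present journalctl -u containerd --no-pager -n 200
}
```

Running `collect_gpu_report > gpu-report.txt` writes everything to one file. Reading the containerd journal may require root privileges, and the report should be reviewed for sensitive details before posting it publicly.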