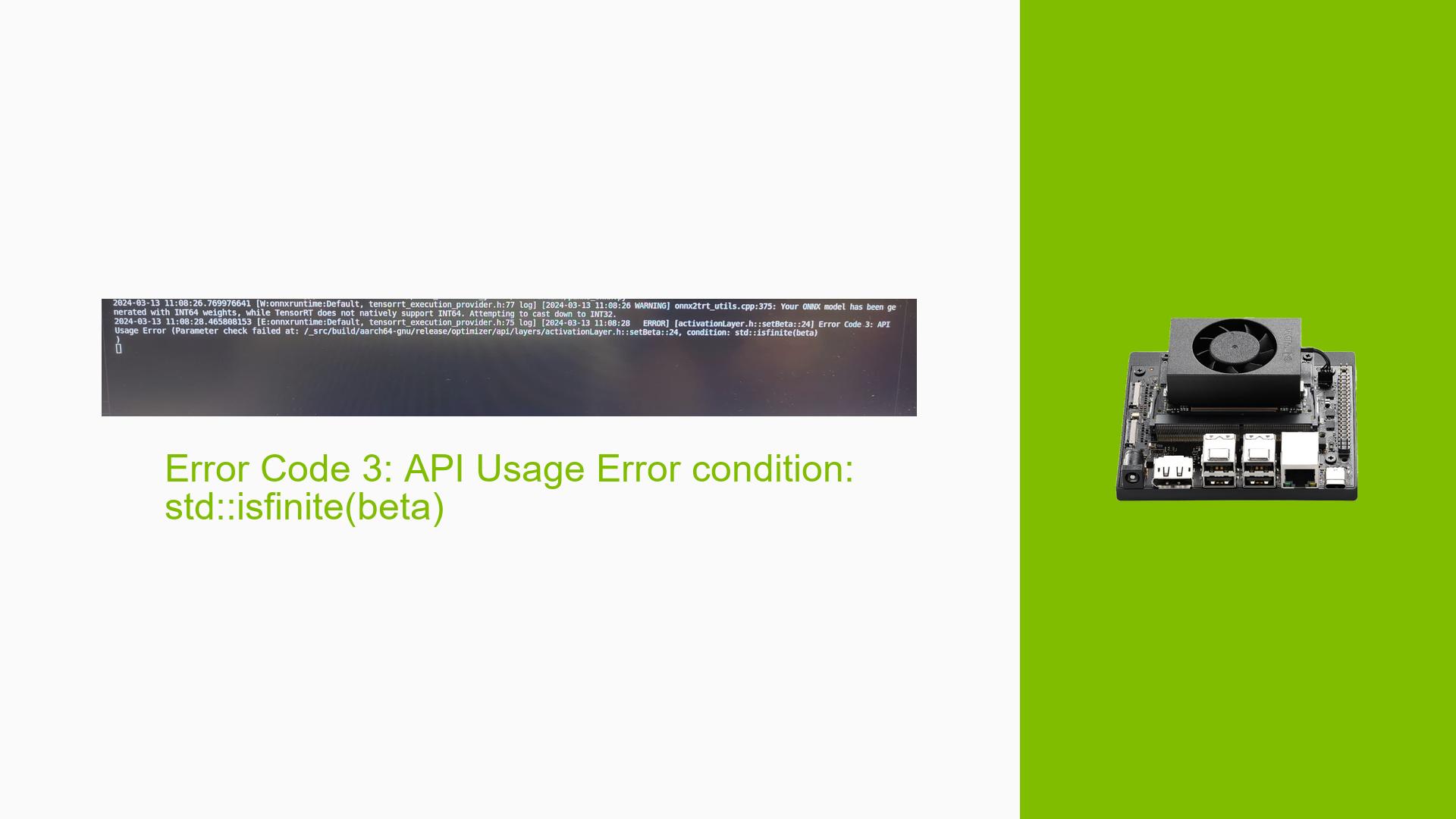

Error Code 3: API Usage Error condition: std::isfinite(beta)

Issue Overview

Users have reported Error Code 3: API Usage Error (condition: std::isfinite(beta)) when running inference on the NVIDIA Jetson Orin Nano with the Docker image dustynv/onnxruntime:r35.3.1 on JetPack 5.1.1. The error occurs when executing a model converted from PyTorch to ONNX format: execution freezes, apparently stuck in an infinite loop. The problem appears only with the TensorRT execution provider in ONNX Runtime; the CUDA execution provider seems to work without issues. The reported environment is:

- TensorRT Version: 8.5.2.2

- ONNX Runtime Version: 1.16.1

- GPU Type: Orin Nano

- Docker Image: dustynv/onnxruntime:r35.3.1

The issue reproduces consistently across multiple attempts and blocks users who rely on TensorRT for optimized inference.

Possible Causes

- Unsupported Layers or Operators: Certain layers in the ONNX model may not be supported by TensorRT, leading to errors during execution.

- Software Bugs or Conflicts: There may be bugs within the TensorRT or ONNX Runtime versions being used, particularly in how they handle specific operations.

- Configuration Errors: Incorrectly set up environments or misconfigured model parameters could lead to runtime errors.

- Driver Issues: Outdated or incompatible drivers for CUDA and TensorRT might cause conflicts during execution.

- Environmental Factors: External factors such as power supply inconsistencies or overheating could affect performance.

- User Errors or Misconfigurations: Improper setup of the inference session or incorrect input data formats could lead to execution failures.

Troubleshooting Steps, Solutions & Fixes

Step-by-Step Instructions

- Identify Provider Causing Error:

  - Run inference with only the TensorRT provider:

    import onnxruntime as ort

    sess = ort.InferenceSession('model.onnx', providers=['TensorrtExecutionProvider'])

  - Run inference with only the CUDA provider:

    sess = ort.InferenceSession('model.onnx', providers=['CUDAExecutionProvider'])
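
The provider comparison can also be scripted so that each provider is tried the same way and silent fallbacks are noticed. The following is a minimal sketch, not a definitive implementation: `try_provider` and its `session_factory` parameter are hypothetical names introduced here, and the code assumes the `onnxruntime` Python package is installed in the container.

```python
from typing import Callable, Optional


def try_provider(model_path: str, provider: str,
                 session_factory: Optional[Callable] = None) -> dict:
    """Create a session restricted to one provider and report what happened."""
    if session_factory is None:
        import onnxruntime as ort  # imported lazily; assumed to be installed
        session_factory = ort.InferenceSession
    try:
        sess = session_factory(model_path, providers=[provider])
    except Exception as exc:  # session creation itself failed
        return {"provider": provider, "ok": False, "active": [], "error": str(exc)}
    # ONNX Runtime may fall back to another provider, so record the ones in use.
    active = list(sess.get_providers())
    return {"provider": provider, "ok": provider in active,
            "active": active, "error": None}
```

Calling `try_provider('model.onnx', 'TensorrtExecutionProvider')` and then the same with `'CUDAExecutionProvider'` gives two comparable reports. Session creation is only the first stage; a run with real inputs still has to follow, because the failure may surface only during inference.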

- Testing with trtexec:

  - Use the trtexec command to test the model directly and check for errors:

    /usr/src/tensorrt/bin/trtexec --onnx=model.onnx
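
To keep the trtexec output for later inspection, the command can be wrapped in a small Python helper. This is a sketch under assumptions: the function names, the log file name, and the timeout value are illustrative choices, and `--verbose` is added on top of the command shown above to get more detailed logs.

```python
import shutil
import subprocess

TRTEXEC = "/usr/src/tensorrt/bin/trtexec"  # path taken from the step above


def build_trtexec_cmd(onnx_path: str, verbose: bool = True) -> list:
    """Assemble the trtexec command line for a given ONNX file."""
    cmd = [TRTEXEC, f"--onnx={onnx_path}"]
    if verbose:
        cmd.append("--verbose")  # request detailed parser and builder logs
    return cmd


def run_trtexec(onnx_path: str, log_path: str = "trtexec.log",
                timeout_s: int = 1800) -> int:
    """Run trtexec, save its combined output to log_path, return the exit code."""
    cmd = build_trtexec_cmd(onnx_path)
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(f"{cmd[0]} not found; adjust TRTEXEC for your image")
    with open(log_path, "w") as log:
        proc = subprocess.run(cmd, stdout=log, stderr=subprocess.STDOUT,
                              timeout=timeout_s)
    return proc.returncode
```

If trtexec reports the same std::isfinite(beta) condition, the problem can be reproduced without ONNX Runtime, which narrows it to the model and TensorRT.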

- Model Compatibility Check:

  - Review the TensorRT support matrix to determine whether all operators used in your model are supported by TensorRT. If unsupported layers are present, consider modifying the model.
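
Before consulting the support matrix, it helps to know which operators the exported model actually contains. The sketch below lists operator types with their counts; it assumes the `onnx` Python package is available, and the helper names are hypothetical.

```python
from collections import Counter


def count_op_types(nodes) -> Counter:
    """Count how often each operator type occurs in an iterable of graph nodes."""
    return Counter(node.op_type for node in nodes)


def load_op_counts(model_path: str) -> Counter:
    """Load an ONNX file and count its top-level operator types."""
    import onnx  # imported lazily; assumed to be installed
    model = onnx.load(model_path)
    # Only the top-level graph is inspected; subgraphs inside If/Loop are skipped.
    return count_op_types(model.graph.node)
```

Printing `load_op_counts('model.onnx').most_common()` yields a short list of operator names that can be checked against the support matrix one by one.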

- Update Drivers and Software:

  - Ensure that you are using the latest versions of TensorRT and ONNX Runtime compatible with your JetPack version.

  - Update CUDA and cuDNN libraries as necessary.

- Check for Environmental Issues:

  - Verify that your power supply is stable and that the device is not overheating during operation.

- Debugging Techniques:

  - Use logging to capture detailed error messages during inference execution.

  - If experiencing freezes, consider running a minimal version of your code to isolate problematic sections.
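
One way to combine both debugging techniques is to run a minimal script in a child process with a time limit, so a hang ends in a timeout instead of blocking the shell. This is a sketch under assumptions: `run_isolated` and `MINIMAL_SCRIPT` are hypothetical names, the time limit is arbitrary, and the approach only helps when the Python process hangs, not when the whole device becomes unresponsive.

```python
import subprocess
import sys

# Minimal reproduction with verbose ONNX Runtime logging (0 = verbose).
MINIMAL_SCRIPT = """
import onnxruntime as ort
so = ort.SessionOptions()
so.log_severity_level = 0
ort.InferenceSession('model.onnx', sess_options=so,
                     providers=['TensorrtExecutionProvider'])
print('session created')
"""


def _as_text(data) -> str:
    """Normalize captured output, which may be None, bytes, or str."""
    if data is None:
        return ""
    return data.decode(errors="replace") if isinstance(data, bytes) else data


def run_isolated(script: str, timeout_s: float) -> dict:
    """Run a Python snippet in a child process; kill it after timeout_s seconds."""
    try:
        proc = subprocess.run([sys.executable, "-c", script],
                              capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired as exc:
        # The child was killed; keep whatever it printed before hanging.
        return {"status": "timeout", "stdout": _as_text(exc.stdout),
                "stderr": _as_text(exc.stderr)}
    status = "ok" if proc.returncode == 0 else "error"
    return {"status": status, "stdout": proc.stdout, "stderr": proc.stderr}
```

Calling `run_isolated(MINIMAL_SCRIPT, 600)` returns a status of `ok`, `error`, or `timeout` together with the captured log text, which can then be searched for the std::isfinite(beta) message.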

Recommended Fixes

- If using unsupported operators in your model, consider replacing them with compatible alternatives or simplifying your model architecture.

- If the model runs correctly with the CUDA provider alone, keep that configuration as a workaround until further updates are available.

Best Practices for Prevention

- Regularly update your software stack (JetPack, TensorRT, ONNX Runtime) to leverage improvements and bug fixes.

- Thoroughly test models on supported platforms before deploying on Jetson devices.

- Maintain a backup of working configurations and models to facilitate quick recovery from issues.

Unresolved Aspects

Further investigation may be required into specific operators within user models that are causing compatibility issues with TensorRT, as well as potential updates from Nvidia regarding support for these operations.
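
The condition in the error message, std::isfinite(beta), suggests that a value TensorRT treats as beta was NaN or infinite. Which operator that value belongs to is not established by the reports. As one hypothesis worth ruling out, the sketch below scans the floating-point initializers of the ONNX model for non-finite values; the helper names are hypothetical, the `onnx` package is assumed to be installed, and values stored in Constant nodes or node attributes are not covered.

```python
import math


def find_non_finite(tensors: dict) -> dict:
    """Return {name: count} for tensors that contain NaN or infinite values."""
    bad = {}
    for name, values in tensors.items():
        count = sum(1 for v in values if not math.isfinite(v))
        if count:
            bad[name] = count
    return bad


def load_float_initializers(model_path: str) -> dict:
    """Read floating-point initializers from an ONNX file as flat Python lists."""
    import onnx  # imported lazily; assumed to be installed
    from onnx import numpy_helper
    model = onnx.load(model_path)
    tensors = {}
    for init in model.graph.initializer:
        arr = numpy_helper.to_array(init)
        if arr.dtype.kind == "f":  # skip integer, bool, and string tensors
            tensors[init.name] = arr.ravel().tolist()
    return tensors
```

An empty result from `find_non_finite(load_float_initializers('model.onnx'))` does not rule the hypothesis out, but a non-empty one points at specific tensors to trace back to the PyTorch model.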

By following these steps and recommendations, users should be able to diagnose and potentially resolve issues related to Error Code 3 on their Jetson Orin Nano devices effectively.