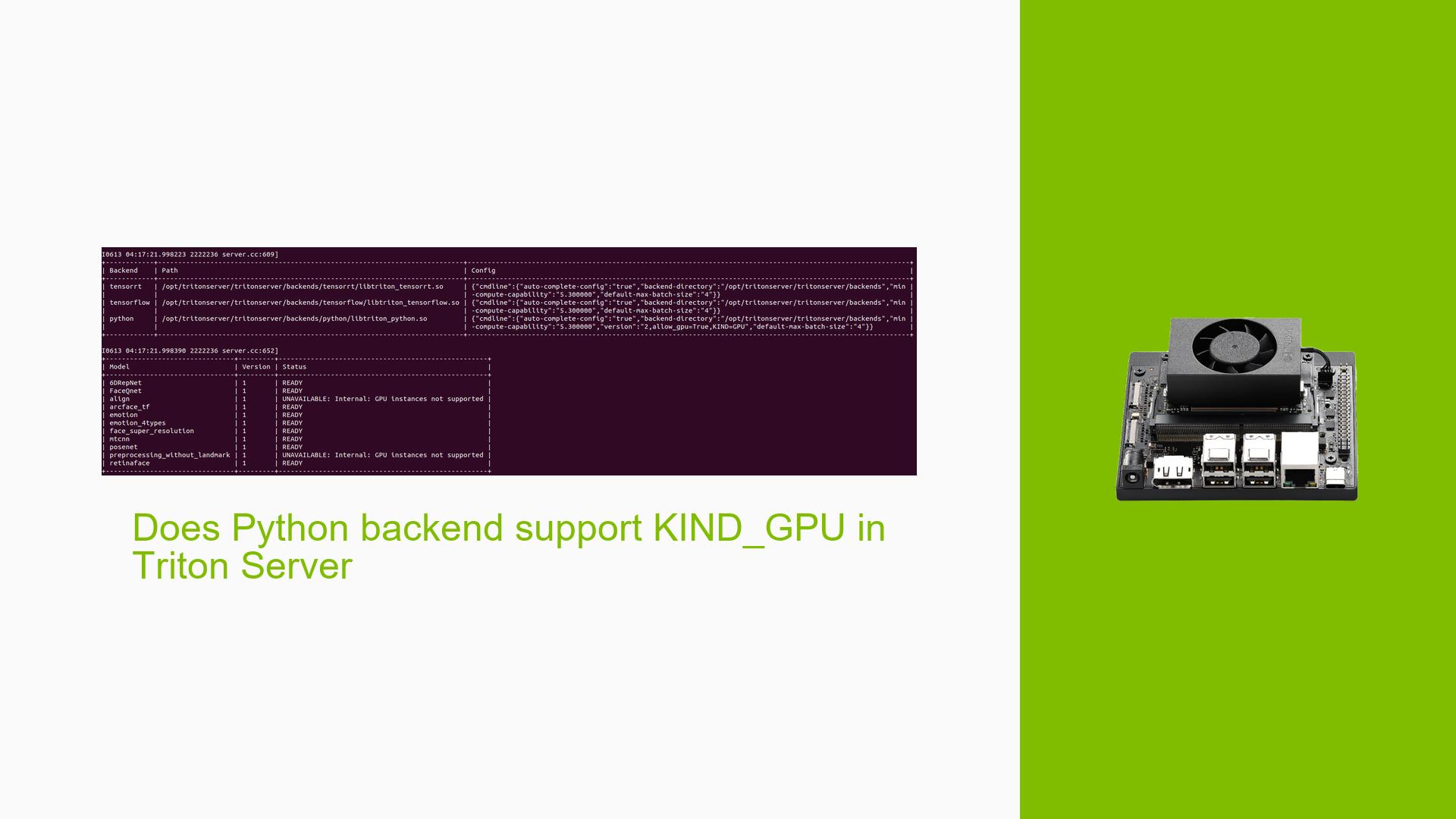

Does Python backend support KIND_GPU in Triton Server

Issue Overview

Users are experiencing difficulties when attempting to run Triton Server on the Nvidia Jetson Orin Nano (16GB). The primary symptom reported is an error message stating "GPU instances not supported" when trying to load specific models that utilize a Python backend. This issue arises during the initial setup of Triton Server, particularly when attempting to load models configured to use GPU resources.

The context of the problem involves two specific models that remain "unready" and fail to load unless their instance group is changed from KIND_GPU to KIND_CPU. This indicates that the models are not compatible with GPU execution under the current configuration.

The impact of this issue significantly hampers the user experience, as it restricts the use of GPU capabilities, which are essential for optimizing performance on the Jetson Orin Nano. Users have expressed frustration as they seek solutions to enable full GPU support for all models.

Possible Causes

-

Python Backend Limitations: The Python backend in Triton Server does not support GPU tensors and asynchronous batch loading (Async BLS). This limitation may prevent certain models from utilizing GPU resources effectively.

-

Model Configuration Issues: Incorrect configurations in the model’s

config.pbtxtfile may lead to compatibility issues with GPU execution. Users have reported changing instance groups without resolving the underlying problem. -

GPU Tensor Usage: If GPU tensors are utilized in the Python models, this could lead to incompatibility with the current backend settings, resulting in errors.

-

Environment Variables: The variable

FORCE_CPU_ONLY_INPUT_TENSORSmay not be set correctly. This variable determines whether input tensors should be processed on the CPU or GPU, affecting model loading behavior. -

Driver or Software Bugs: There may be bugs or conflicts within the Triton Server software or its dependencies that could cause these issues.

Troubleshooting Steps, Solutions & Fixes

-

Check Model Configuration:

- Open the

config.pbtxtfile for the problematic models. - Ensure that the instance group is set correctly. If using

KIND_GPU, verify that all parameters align with GPU requirements.

- Open the

-

Verify Tensor Usage:

- Determine whether GPU tensors are being used in your Python models. This can be done by reviewing your model code and checking for any tensor operations that explicitly require GPU resources.

-

Modify Environment Variables:

- Set the environment variable

FORCE_CPU_ONLY_INPUT_TENSORSto "yes". This can help in forcing the model to use CPU-only input tensors. - Example command to set this variable before starting Triton Server:

export FORCE_CPU_ONLY_INPUT_TENSORS=yes

- Set the environment variable

-

Testing with Different Configurations:

- If changing

FORCE_CPU_ONLY_INPUT_TENSORSdoes not resolve the issue, try switching back toKIND_CPUfor testing purposes. - Run a simplified version of your model without any complex dependencies to isolate the problem.

- If changing

-

Gather System Information:

- Use commands such as

nvidia-smiandjetson_clocksto check if your GPU is recognized and functioning properly. - Collect logs from Triton Server for further diagnostics.

- Use commands such as

-

Review Documentation:

- Consult the official Triton Inference Server documentation regarding Jetson compatibility and Python backend limitations.

- Relevant documentation can be found at:

-

Community Support:

- If issues persist, consider sharing your model files and detailed steps taken during setup on community forums or GitHub issues for further assistance from developers or other users who may have faced similar problems.

-

Unresolved Aspects:

- Some users reported that despite following troubleshooting steps, they continued facing issues without clear resolution paths provided by community responses.

- Further investigation into specific model configurations or potential updates from Nvidia regarding backend support may be necessary.

By following these steps, users can systematically diagnose and potentially resolve issues related to running Triton Server on Nvidia Jetson Orin Nano with Python backend support for GPU instances.