Docker and NVIDIA L4T TensorRT Image Setup for Jetson Orin Nano

Issue Overview

Users of the NVIDIA Jetson Orin Nano Developer Kit are running into difficulties when moving from a virtual environment to Docker container deployments. The main points of confusion are whether the existing Docker installation is sufficient, which NVIDIA L4T TensorRT image matches the installed TensorRT and CUDA versions, and whether the NVIDIA Container Toolkit (NCT) needs to be installed separately. The issue arises during setup, typically while configuring a development environment for computer vision models. Users report varying degrees of success, and some are frustrated by how complex the process is.

Context:

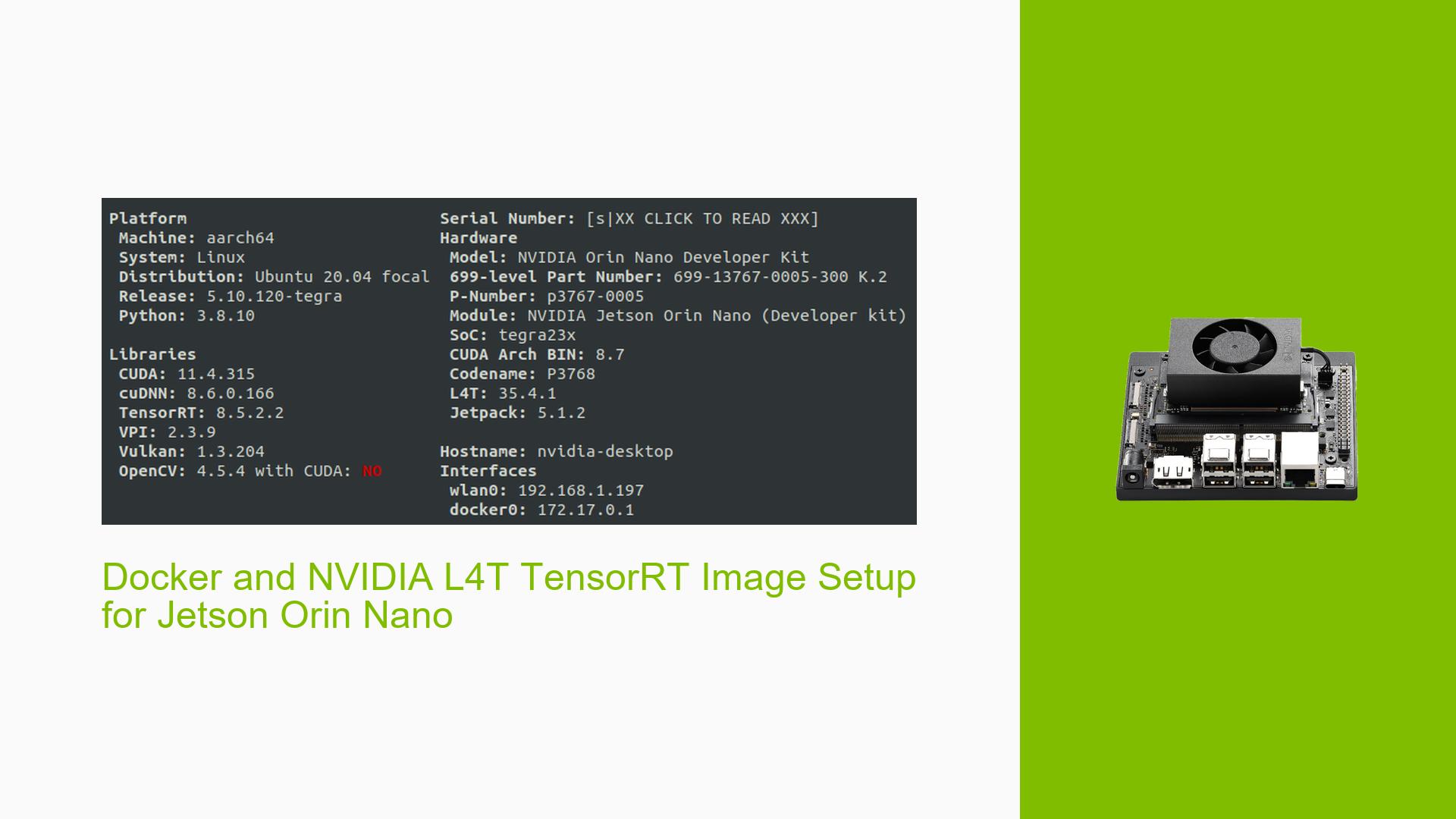

- Hardware: Jetson Orin Nano Dev 8 GB

- Software: JetPack 5.1.2, TensorRT 8.5.2.2, CUDA 11.4.315

- Frequency: Issues are frequently reported by users transitioning to Docker.

- Impact: Difficulty in setting up a functional development environment impacts productivity and user experience.

Possible Causes


Docker Installation Issues:

- Users may not be aware that their existing Docker installation is adequate or if a new installation is necessary.


TensorRT Image Confusion:

- Uncertainty about which TensorRT image (runtime vs. development) to use based on their application needs.


NVIDIA Container Toolkit (NCT) Compatibility:

- Questions around whether the pre-installed NCT version is sufficient or if an update is required after flashing JetPack.


Missing Compiler Tools:

- The absence of `nvcc` in the runtime image can lead to confusion about the image's development capabilities.

Cross-Platform Development Concerns:

- Users are unsure about building Docker images on non-NVIDIA hardware (e.g., Windows machines without GPUs).

Troubleshooting Steps, Solutions & Fixes

Step-by-Step Instructions


Verify Docker Installation:

- Run `docker --version`.

- Confirm that Docker is installed and note its version (version 24.0.5 was reported for this setup).
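
If this check is part of a setup script, a minimal Python sketch like the following could parse the `docker --version` output. The helper names are hypothetical, and the sample output string in the comment is illustrative rather than captured from a Jetson.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Matches the version field of `docker --version` output. Illustrative example:
# "Docker version 24.0.5, build 24.0.5-0ubuntu1~20.04.1"
_DOCKER_VERSION_RE = re.compile(r"Docker version (\d+)\.(\d+)\.(\d+)")


def parse_docker_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Extract (major, minor, patch) from `docker --version` output, or None."""
    match = _DOCKER_VERSION_RE.search(output)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def installed_docker_version() -> Optional[Tuple[int, int, int]]:
    """Run `docker --version`; return None if Docker is missing or fails."""
    if shutil.which("docker") is None:
        return None
    try:
        result = subprocess.run(
            ["docker", "--version"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return parse_docker_version(result.stdout)


if __name__ == "__main__":
    version = installed_docker_version()
    if version is None:
        print("Docker was not found or did not report a version")
    else:
        print("Docker %d.%d.%d is installed" % version)
```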

Choosing the Right TensorRT Image:

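- For applications that only need to run inference, use the runtime image: `docker pull nvcr.io/nvidia/l4t-tensorrt:r8.5.2-runtime`

- For development (access to headers and compilers), use the devel image: `docker pull nvcr.io/nvidia/l4t-tensorrt:r8.5.2.2-devel`

- Both images are hosted on the NGC registry (`nvcr.io`), and both tags correspond to TensorRT 8.5.2, the version that ships with JetPack 5.1.2.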
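
The image choice above can be sketched as a small lookup in Python, assuming the installed TensorRT version is read from the host's Debian package version (for example via `dpkg-query`). The table holds only the two tags named in this guide, the helper names are hypothetical, and `8.5.2.2-1+cuda11.4` is an assumed example of the package version format. The run command uses `--runtime nvidia`, the Docker flag used on Jetson to give the container GPU access.

```python
from typing import Dict, List

REPOSITORY = "nvcr.io/nvidia/l4t-tensorrt"

# Only the tags named in this guide (TensorRT 8.5.2.2, JetPack 5.1.2).
# Other entries would have to be checked against the NGC catalog first.
KNOWN_TAGS: Dict[str, Dict[str, str]] = {
    "8.5.2.2": {"runtime": "r8.5.2-runtime", "devel": "r8.5.2.2-devel"},
}


def tensorrt_version_from_dpkg(dpkg_version: str) -> str:
    """Drop the Debian revision, e.g. "8.5.2.2-1+cuda11.4" -> "8.5.2.2"."""
    return dpkg_version.split("-", 1)[0]


def choose_image(tensorrt_version: str, need_compiler: bool) -> str:
    """Return the devel image if headers/nvcc are needed, else the runtime image."""
    tags = KNOWN_TAGS.get(tensorrt_version)
    if tags is None:
        raise KeyError(f"no known l4t-tensorrt tag for TensorRT {tensorrt_version}")
    flavor = "devel" if need_compiler else "runtime"
    return f"{REPOSITORY}:{tags[flavor]}"


def docker_run_args(image: str) -> List[str]:
    """Build an interactive `docker run` command using the NVIDIA runtime."""
    return ["docker", "run", "--rm", "-it", "--runtime", "nvidia", image]
```

For example, `choose_image(tensorrt_version_from_dpkg("8.5.2.2-1+cuda11.4"), need_compiler=True)` returns `nvcr.io/nvidia/l4t-tensorrt:r8.5.2.2-devel`. Raising an error for unknown versions, rather than guessing a tag, avoids pulling an image that does not match the host's TensorRT.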

Check NVIDIA Container Toolkit Installation:

- Verify whether NCT is installed with `sudo apt show nvidia-container-toolkit`.

- If it was installed with JetPack 5.1.2, it should be sufficient; no further installation is needed.
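
A scripted variant of this check could ask dpkg for the package's status directly. This is a minimal Python sketch; the helper names are hypothetical, and it relies on dpkg reporting a fully installed package with the status string `install ok installed`.

```python
import shutil
import subprocess


def is_installed(status_field: str) -> bool:
    """dpkg marks a fully installed package as "install ok installed"."""
    return status_field.strip() == "install ok installed"


def package_status(package: str) -> str:
    """Return dpkg's Status field for `package`, or "" if it cannot be read."""
    if shutil.which("dpkg-query") is None:
        return ""  # not a Debian/Ubuntu host, e.g. a Windows machine
    try:
        result = subprocess.run(
            ["dpkg-query", "-W", "--showformat=${Status}", package],
            capture_output=True, text=True, timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return ""
    # A non-zero exit code means dpkg has no record of the package.
    return result.stdout if result.returncode == 0 else ""


if __name__ == "__main__":
    status = package_status("nvidia-container-toolkit")
    print("nvidia-container-toolkit installed:", is_installed(status))
```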

Using nvcc:

- If `nvcc` is not found in the runtime image, switch to the development image, which contains the necessary compiler tools.
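
To confirm which image provides the compiler, a probe can run a short shell test inside each container. The Python sketch below is an assumption-based helper, not an NVIDIA tool: it assumes the image has `sh` and that `nvcc`, when present, is either on PATH or at the conventional CUDA location `/usr/local/cuda/bin/nvcc`. The function names are hypothetical.

```python
import subprocess
from typing import List

# Assumption: nvcc is on PATH or at the conventional CUDA toolkit location.
PROBE = "command -v nvcc || test -x /usr/local/cuda/bin/nvcc"


def nvcc_probe_args(image: str) -> List[str]:
    """Build a `docker run` command that exits with 0 only if nvcc is present."""
    return ["docker", "run", "--rm", "--runtime", "nvidia", image, "sh", "-c", PROBE]


def has_nvcc(image: str) -> bool:
    """Run the probe; requires Docker and the image to be available on the Jetson."""
    result = subprocess.run(nvcc_probe_args(image), capture_output=True, text=True)
    return result.returncode == 0
```

Per the step above, on the Jetson `has_nvcc("nvcr.io/nvidia/l4t-tensorrt:r8.5.2.2-devel")` would be expected to return `True`, and the runtime tag `False`.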

Building Docker Images on Non-NVIDIA Machines:

- Building l4t-based images on non-NVIDIA hardware (e.g., a Windows machine without a GPU) is not officially supported. Build directly on the Jetson device, or use a Linux machine with an NVIDIA GPU.
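
A build script could run a preflight check that encodes this recommendation. The Python sketch below is a heuristic under stated assumptions, not an NVIDIA tool: it treats the presence of `/etc/nv_tegra_release` (written by L4T) as a sign of a Jetson, and `nvidia-smi` on PATH as a sign of an NVIDIA GPU. The function names and messages are hypothetical.

```python
import os
import platform
import shutil


def classify_build_host(system: str, is_jetson: bool, has_nvidia_gpu: bool) -> str:
    """Map host facts to this guide's recommendation for building l4t images."""
    if is_jetson:
        return "recommended: build on the Jetson itself"
    if system == "Linux" and has_nvidia_gpu:
        return "acceptable: Linux host with an NVIDIA GPU"
    return "not officially supported: build on the Jetson instead"


def describe_this_host() -> str:
    # Heuristics (assumptions): L4T provides /etc/nv_tegra_release, and an
    # NVIDIA GPU driver install usually puts nvidia-smi on PATH.
    return classify_build_host(
        system=platform.system(),
        is_jetson=os.path.exists("/etc/nv_tegra_release"),
        has_nvidia_gpu=shutil.which("nvidia-smi") is not None,
    )


if __name__ == "__main__":
    print(describe_this_host())
```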

Additional Recommendations

- Keep all software dependencies up to date by checking for JetPack updates.

- Refer to the official NVIDIA documentation for detailed instructions on Docker and NCT setup.

Best Practices for Future Prevention

- Regularly update all software components (Docker, JetPack, TensorRT).

- Maintain documentation of your setup process for future reference.

- Engage with community forums for shared experiences and solutions.

Unresolved Aspects

- Users still express uncertainty regarding specific configurations and best practices for cross-platform development.

- Further clarification may be needed on optimal setups for various application scenarios involving different TensorRT images.

This document serves as a comprehensive guide to troubleshoot and resolve common issues faced by users transitioning to Docker on the Jetson Orin Nano platform, ensuring a smoother development experience moving forward.