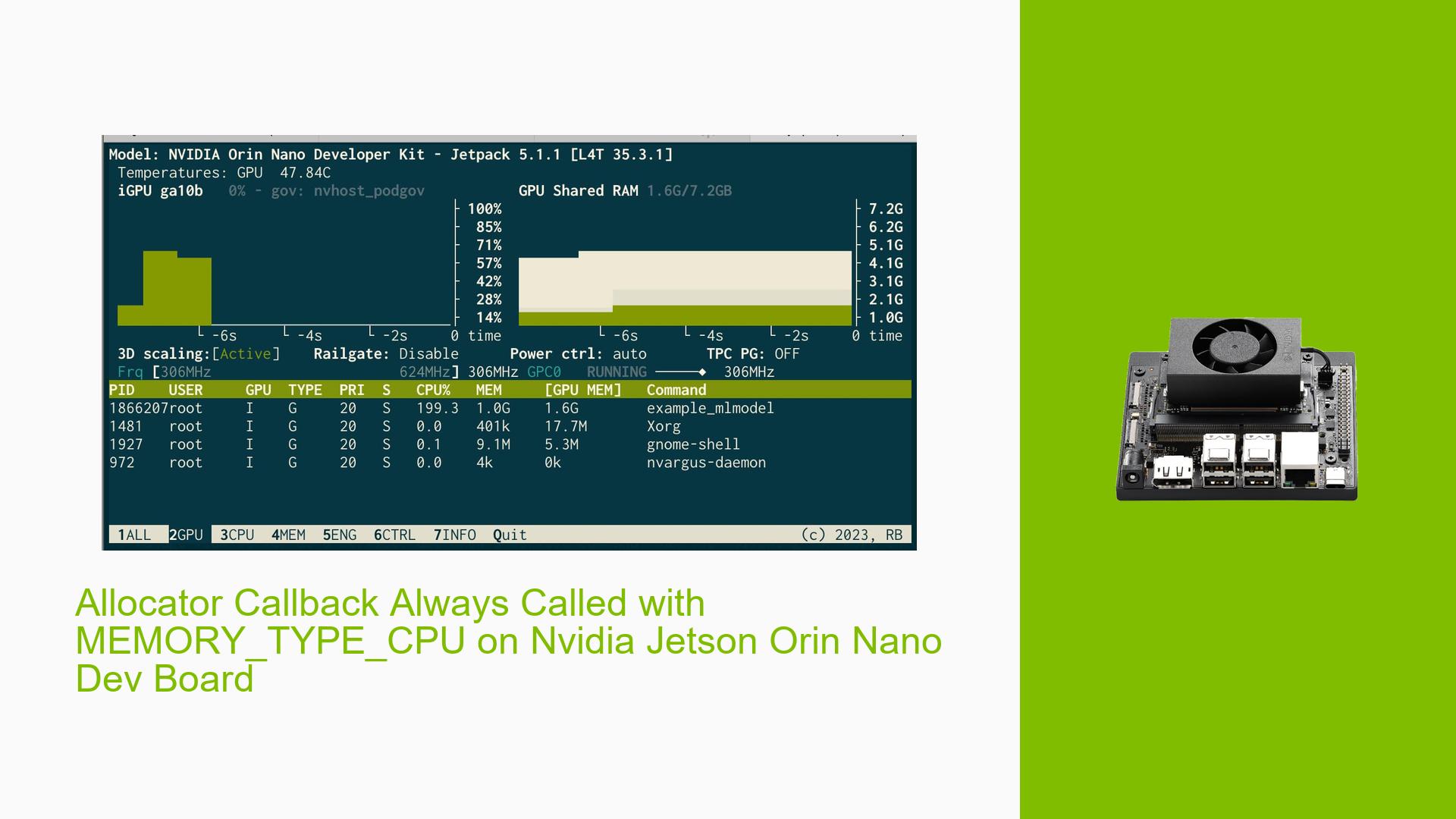

Allocator Callback Always Called with MEMORY_TYPE_CPU on Nvidia Jetson Orin Nano Dev Board

Issue Overview

Users are experiencing a consistent issue where the allocation callback in Triton Server’s in-process API is always invoked with TRITONSERVER_MEMORY_CPU as the memory type, regardless of the expected behavior. This occurs during the execution of models on the Jetson Orin Nano Dev Kit, which is running the latest Jetson Linux and Jetpack versions. While GPU memory utilization can be confirmed through tools like jtop, users are puzzled as to why the allocation callback does not reflect this. The problem appears to be model-dependent, potentially influenced by the backend being used (e.g., TensorFlow). Users report that all output tensor allocations return as CPU memory, raising concerns about configuration or implementation errors.

Possible Causes

- Backend Limitations: Some backends may only support CPU tensor allocations on the Jetson platform, leading to the observed behavior.

- Configuration Errors: Users may have misconfigured settings or overlooked setup functions that dictate memory preferences.

- Model-Specific Behavior: Certain models might inherently prefer CPU memory for output tensors, regardless of GPU usage.

- Driver Issues: Incompatibilities or bugs in the drivers could result in improper handling of memory types.

- Environmental Factors: Power supply issues or thermal conditions might affect performance and allocation behaviors.

Troubleshooting Steps, Solutions & Fixes

-

Confirm GPU Memory Usage:

- Use

jtopto monitor GPU memory usage. Ensure that your process is listed under the GPU tab and check for active memory consumption. - Consider using alternative tools such as

nvidia-smifor more detailed insights into GPU utilization.

- Use

-

Check Backend Compatibility:

- Verify which backend you are using (e.g., TensorFlow) and consult documentation to determine if it supports GPU tensor allocations on Jetson devices.

-

Review Allocation Callback Implementation:

- Ensure your allocation callback is correctly implemented according to examples provided in Triton Server documentation.

- Check if you have set up a query function using

TRITONSERVER_ResponseAllocatorSetQueryFunctionand confirm whether it is being called.

-

Modify Memory Type Preferences:

- Investigate if there are additional setup functions or configurations that allow you to specify preferred memory types for tensor allocations.

-

Test with Different Models:

- Experiment with various models or configurations to determine if the issue persists across different setups.

-

Driver Updates:

- Ensure that your Jetson device has the latest drivers installed. Use NVIDIA’s SDK Manager to check for updates and apply them as necessary.

-

Consult Documentation:

- Refer to the official Triton Server user guide and Jetson documentation for any updates or notes regarding memory management and backend compatibility.

-

Community Support:

- Engage with community forums or support channels for shared experiences and potential solutions from other developers facing similar issues.

-

Code Snippet Example:

TRITONSERVER_ResponseAllocatorNew(&allocator); TRITONSERVER_ResponseAllocatorSetQueryFunction(allocator, my_query_function); -

Best Practices:

- Regularly update your software environment and dependencies.

- Document any changes made during troubleshooting for future reference.

- Test configurations in a controlled manner to isolate variables affecting performance.

This structured approach should assist users in diagnosing and resolving issues related to memory allocation callbacks on the Nvidia Jetson Orin Nano Dev board while using Triton Server’s in-process API.